4 Introduction to Statistical Modelling with Implementation in R

This module contains an introduction to the concepts of statistical modelling, covering the key types of models, starting with simple linear regression and progressing to more complex models with implementation in R. Module 4 also introduces various methodologies for model selection, model prediction and cross-validation.

4.1 Concept of statistical modelling

4.1.1 Overview of statistical modelling

Statistical modelling, also known as regression analysis, can be described as a statistical technique used for exploring the relationship between a dependent variable (also called the outcome or response variable) and one or more independent variables (also called the explanatory variable or covariates). Data is viewed as being generated by some random process from which conclusions can be drawn. Statistical models help to understand these processes in greater depth, something that can be of interest for multiple reasons.

- Future predictions can be made from understanding the random process

- Decisions can be made based on the inference from the random process

- The random process itself may be of scientific interest

For example, statistical modelling can be used to find out about the relationship between rainfall and crop yields, or the relationship between unemployment and poverty.

Suppose for \(i=1,\cdots,n\) observations we have the observed responses \[ \boldsymbol{y}=\begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix}, \] where each \(y_i\) has an associated vector of values for \(p\) independent variables

\[ x_i=\begin{pmatrix} x_{i1} \\ x_{i2} \\ \vdots \\ x_{in} \end{pmatrix}. \]

The observed responses \(\boldsymbol{y}\) are assumed to be realisations of the random variables denoted by \[ \boldsymbol{Y}=\begin{pmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{pmatrix}. \] Alternatively, the random variable \(Y_i\) is the predicted (or fitted) value of the observed response \(y_i\).

The use of either upper or lower case is important in statistical modelling as the case used indicates to whether the variable is fixed (e.g. the observed responses \(\boldsymbol{y}\) ) or random (e.g. the predicted responses \(\boldsymbol{Y}\) ). The variability in the independent variable(s) is not modelled, therefore, it is treated as a fixed (not random) variable, hence given in lower case, \(x_i\).

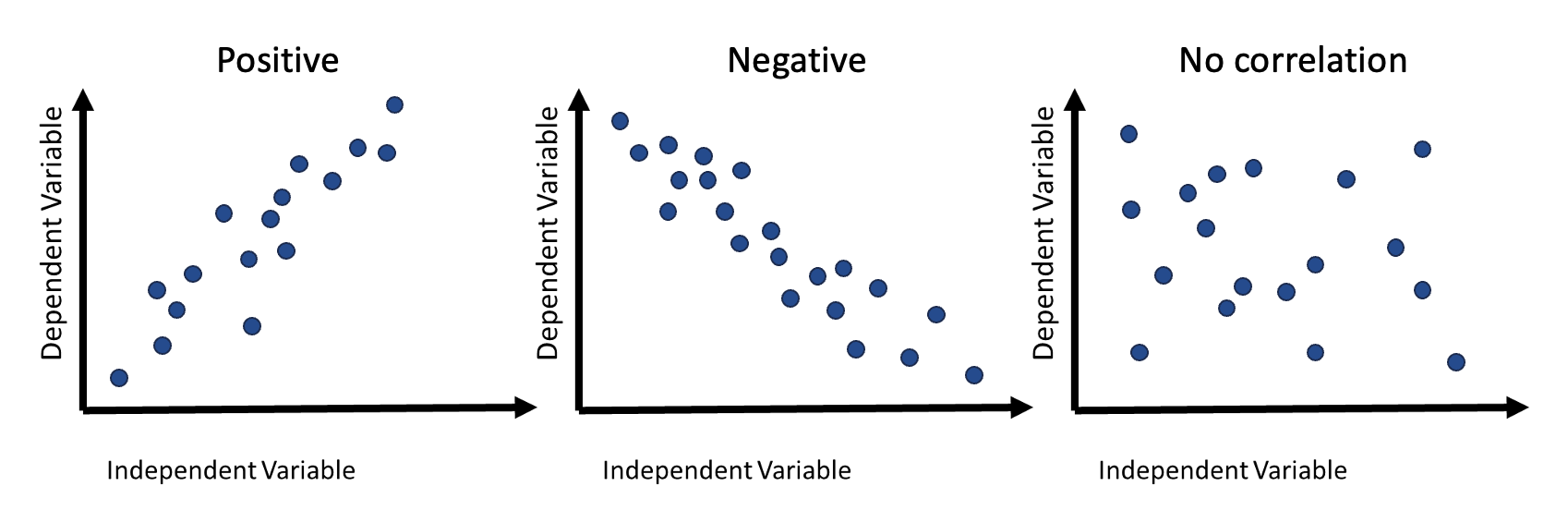

4.1.2 Correlation

The relationship between variables can be determined by the extent to which they are related, also known as correlation. Correlation can either be positive, negative, or it does not exist (no correlation). What each type of correlation means is as follows:

- Positive: as the value of one variable increases, the value of another increases

- Negative: as the value of one variable decreases, the value of another decreases

- No correlation: the value of one variable does not affect the value of another variable

A visual example of correlation can be seen in the figure below.

(#fig:image correlation)Visual examples of the different types of correlation

Exercise: Identify the dependent and independent variables in the following sentence:

A researcher investigates the effects of school density on the grades of its pupils.

4.1.3 Scaled covariates

When using more than one covariate in the modelling, it is important that they are given in the same units for comparison purposes. In the cases where they are not in the same units, covariates can be scaled to ensure the results are comparable.

The two main methods for scaling are as follows:

- Centring: subtract each value from the mean value

- Z-score: subtract each value from the mean value and divide by the standard deviation

4.1.4 Uncertainty

Uncertainty and error is unavoidable when estimating and predicting. The less error in your predictions, the more reliable the results are, therefore, it is important to measure the error margin when modelling. This can be done by measuring how close (or far) the predicted value is from the mean value. Alternatively, confidence intervals (in frequentest/classical statistics) or credible intervals (in Bayesian statistics) can be used. In this chapter, the focus is on frequentest statistics so confidence intervals will be used, Bayesian statistics will be introduced in Module 6.

4.2 Simple regression

There are some important assumptions required for simple regression modelling, given as follows.

- Normality of the response/residuals (e.g. histograms, Q-Q plot)

- Linear relationship between response and predictors (e.g. scatter plot, residuals vs fitted plot)

- Homoscedasticity - constant variance of the residuals (e.g. spread-location plot)

- Independence between predictors - no multicollinearity (e.g. use

Corr(X1, X2)function, should be approximately equal to 1)

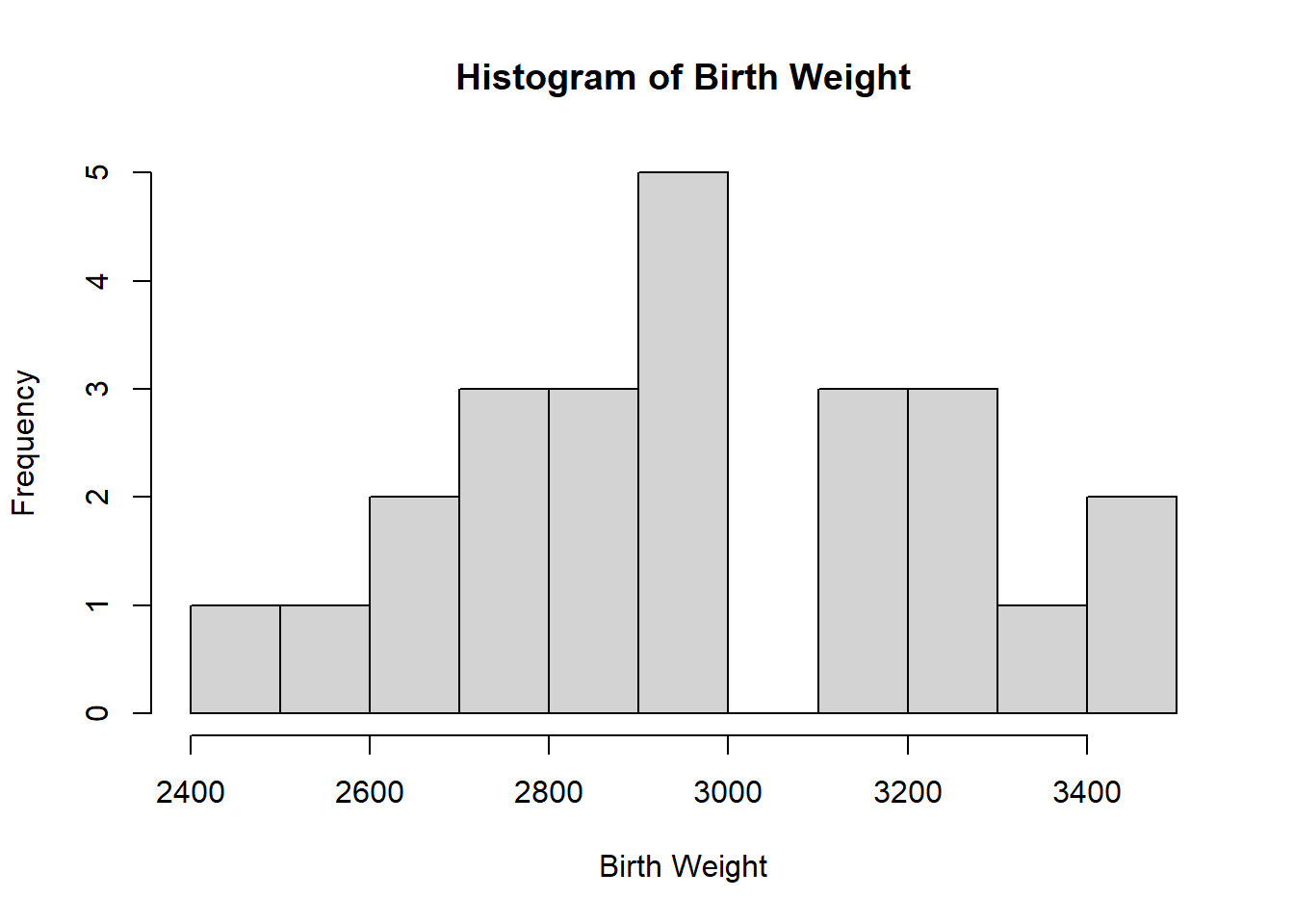

To check that the response follows the normality assumption, plots can be used. If the normality assumption holds, the shape of the histogram will resemble the shape of a bell curve, as seen in the example below using the birth data. This dataset has the dependent variable Weight for the birth weight (in grams) of 24 newborn babies with 2 independent variables, Sex for the sex and Age for the gestational age (in weeks) of the babies, where Sex is a categorical variable with values

\[\text{Sex} = \begin{cases} 1 & \text{if male, or} \\ 2 & \text{if female}. \end{cases}\]

#create birth weight dataset

birth <- data.frame(

Sex= c(1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2),

Age=c(40,38,40,35,36,37,41,40,37,38,40,38,40,36,40,38,42,39,40,37,36,38,39,40),

Weight=c(2968,2795,3163,2925,2625,2847,3292,3473,2628,3176,3421,2975,3317,

2729,2935,2754,3210,2817,3126,2539,2412,2991,2875,3231))#plot a histogram of the birth weights

hist(birth$Weight, breaks=10,

main="Histogram of Birth Weight",

xlab = "Birth Weight")

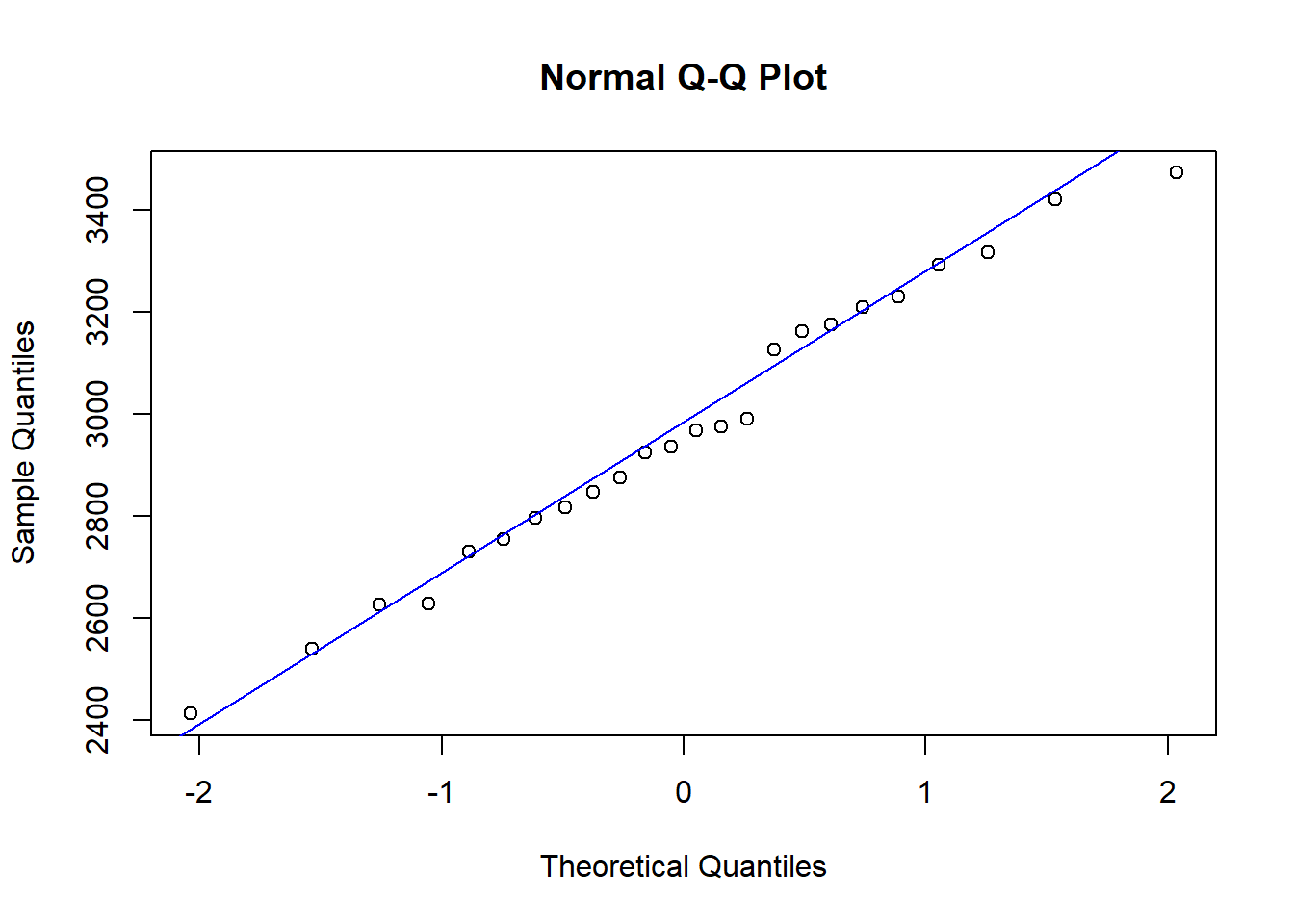

Alternatively, a Q-Q (quantile-quantile) plot can be used to assess the validity of the normality assumption, which plots the theoretical quantiles against the sample quantiles. If the normality assumption holds, the points on the plot will approximately follow a straight line. To plot this in R, the function qqnorm() can be used with an argument for the response that is being assessed, with the function qqline() being used with the response as an argument to add a reference line, making it easier to see whether the relationship is in fact linear. This is demonstrated below with the birth dataset, where it can be seen that the normality assumption holds given that the points on the Q-Q plot approximately follow the straight line given. However, in the case where the normality assumption does not hold, transformations such as the log-transformation can be used. If transformations are not appropriate, such as when the data is count or binary, alternative methods of modelling are required, leading to generalised linear modelling discussed later in this module.

#qq plot of the birth weight data

qqnorm(birth$Weight)

#add a reference line to the plot

qqline(birth$Weight, col="blue")

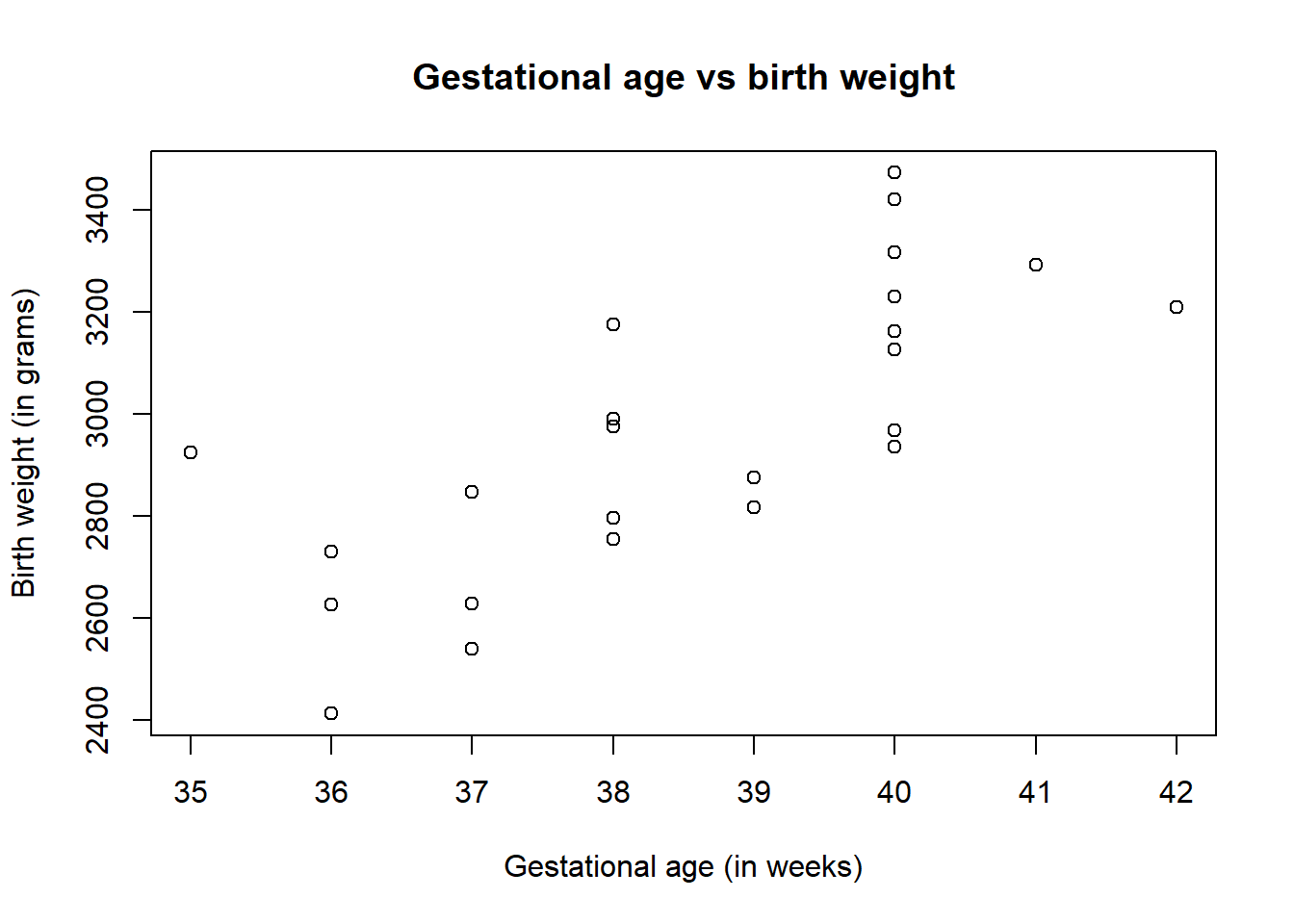

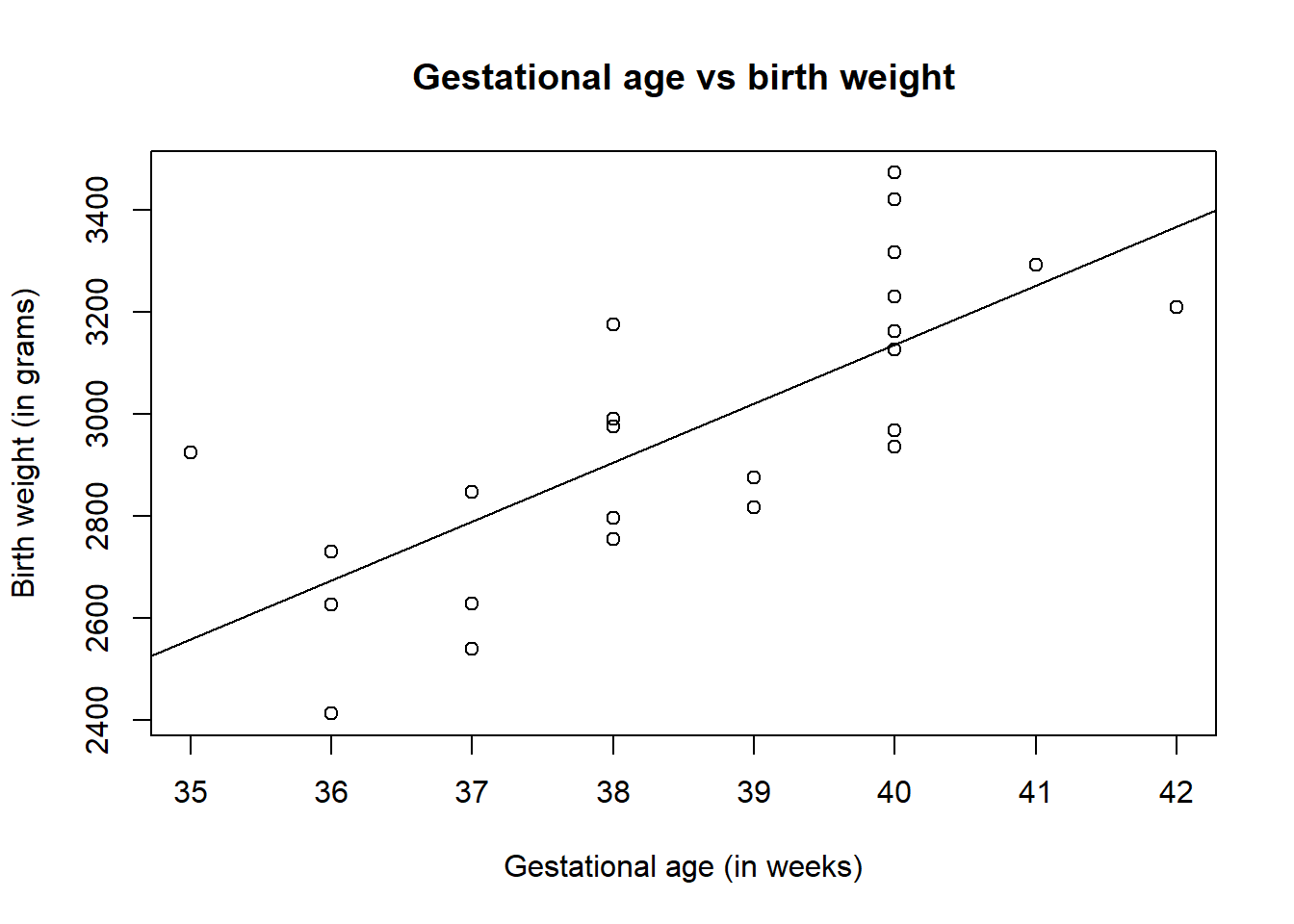

Using the plotting techniques discussed in Module 2, exploratory analysis can be conducted on the data, checking the assumption of a linear relationship between the response and predictors. Through using the plot() function with the response and the predictor you wish to test as arguments, if the resulting scatter plot has a linear trend, then this assumption is met.

#scatter plot for linear assumption

plot(birth$Age, birth$Weight,

xlab = "Gestational age (in weeks)",

ylab = "Birth weight (in grams)",

main = "Gestational age vs birth weight")

The plot of gestational age vs birth weight shows that there is a positive correlation between the two variables, indicating that as gestational age increases, the birth weight also increases, where the points roughly follow a straight line indicating that the assumption of linearity between the response and predictor is met.

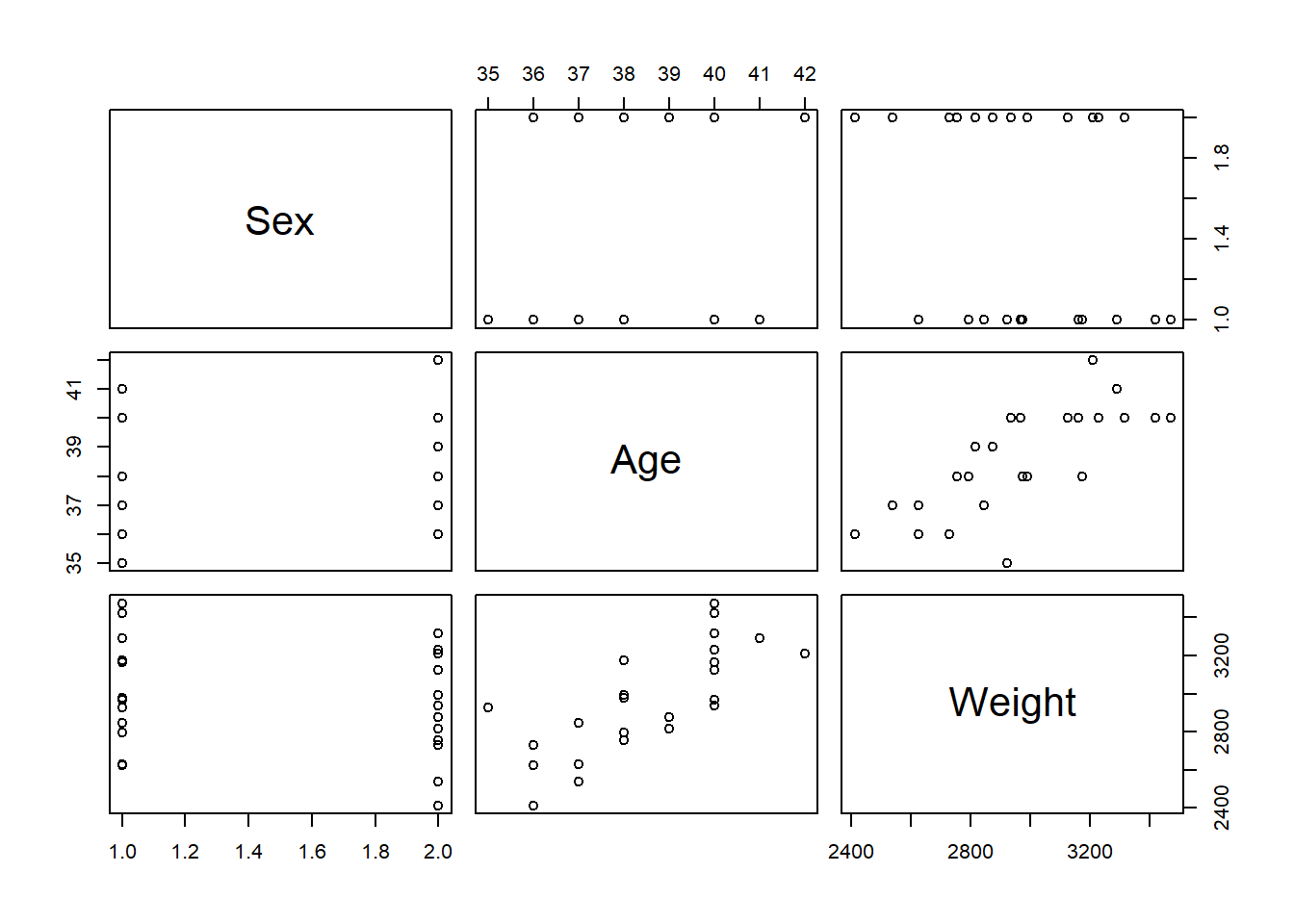

Another way to test this assumption is to use the pairs() function in R, where when the dataset of interest is included as an argument, it provides a matrix of scatter plots that show the relationship of each combination of variables available in the data.

The scatter plots for Age and Weight indicate that the relationship between the variables is approximately linear and therefore the assumption is met. However, if this assumption was not upheld, then appropriate transformations could be used, for example, a log-transformation. This is discussed more in the non-linear regression modelling section.

Alternative ways of checking assumptions require for the model to be fitted first, these methods will be discussed in the following sections.

4.2.1 Linear regression

Simple linear regression is a regression model which has a linear relationship due to the dependent variable depends only on one independent variable, alternatively, the independent variable is conditioned only on one dependent variable. A key concept of the simple linear regression model is that it is assumed each response follows a normal distribution, \(Y_i \sim N(\mu_i, \sigma^2)\). The simple linear regression model can be written as \[ Y_i = \beta_0 + \beta_1 x_i + \epsilon_i,\]

where \(\beta_0\) is the \(y-\)intercept, \(\beta_1\) is the slope and the errors are independent and identically distributed as \(\epsilon\sim N(0, \sigma^2)\) for \(i=1,...,n\). The error can be described as the random difference between the value of \(Y_i\) and \(\beta_0 +\beta_1 x_i\) which is the value of its conditional mean.

An example of a simple linear regression can be seen below with the birth dataset. The birth weight of each baby can be modelled using either the gestational age or sex of the individual for a simple linear regression model. In this example we will focus on predicting the birth weight of a baby using the gestational age.

\[\text{Weight}_i = \beta_0 + \beta_1\text{Age}_i + \epsilon_i\] where \(\epsilon_i \sim Normal(0, \sigma)\).

To do linear modelling in R, the function lm() can be used with an argument for the model formula. When fitting a simple linear regression model, the function glm(), used for fitting generalised linear models, yields identical results to the function lm(). Generalised linear models will be discussed later in the module.

#fit a linear model for birth weight by gestational age

birth_simple_lm <- lm(Weight ~ Age, data = birth)

birth_simple_lm##

## Call:

## lm(formula = Weight ~ Age, data = birth)

##

## Coefficients:

## (Intercept) Age

## -1485.0 115.5#fit the linear model using the function glm

birth_simple_glm <- glm(Weight ~ Age, data = birth)

birth_simple_glm #results are identical to the results from the lm function##

## Call: glm(formula = Weight ~ Age, data = birth)

##

## Coefficients:

## (Intercept) Age

## -1485.0 115.5

##

## Degrees of Freedom: 23 Total (i.e. Null); 22 Residual

## Null Deviance: 1830000

## Residual Deviance: 816100 AIC: 324.5

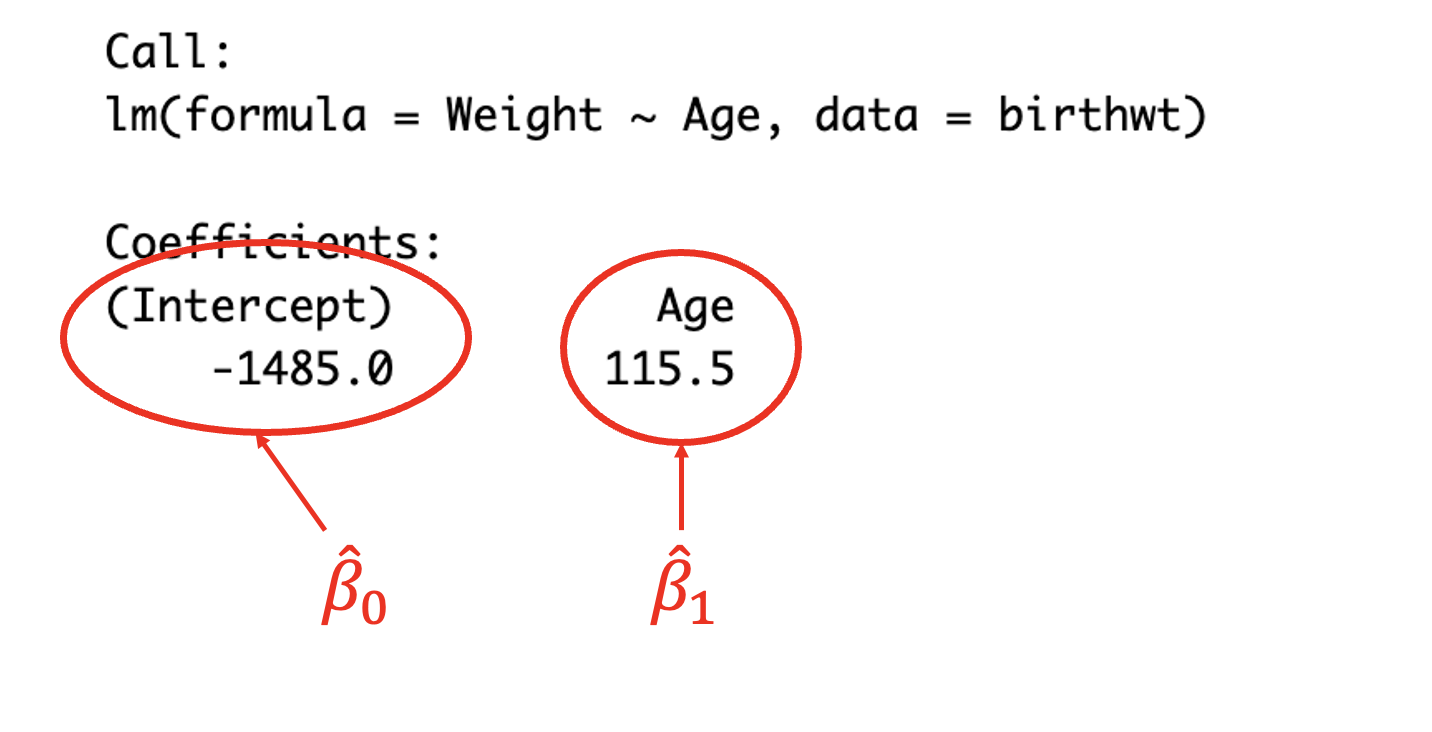

(#fig:image lm summary)Reading the coefficients from the model output

The output of the model gives the resulting coefficients. (Intercept) corresponds to the \(y-\)intercept \(\beta_0\), in this case \(\beta_0=-1485.0\) meaning that if \(\text{Age}=0\) then the birth weight would be -1485.0g. The coefficient Age corresponds to the slope \(\beta_1\), in this case \(\beta_1=115.5\), meaning that for each (1) unit increase in Age, the birth weight will increase by 115.5g.

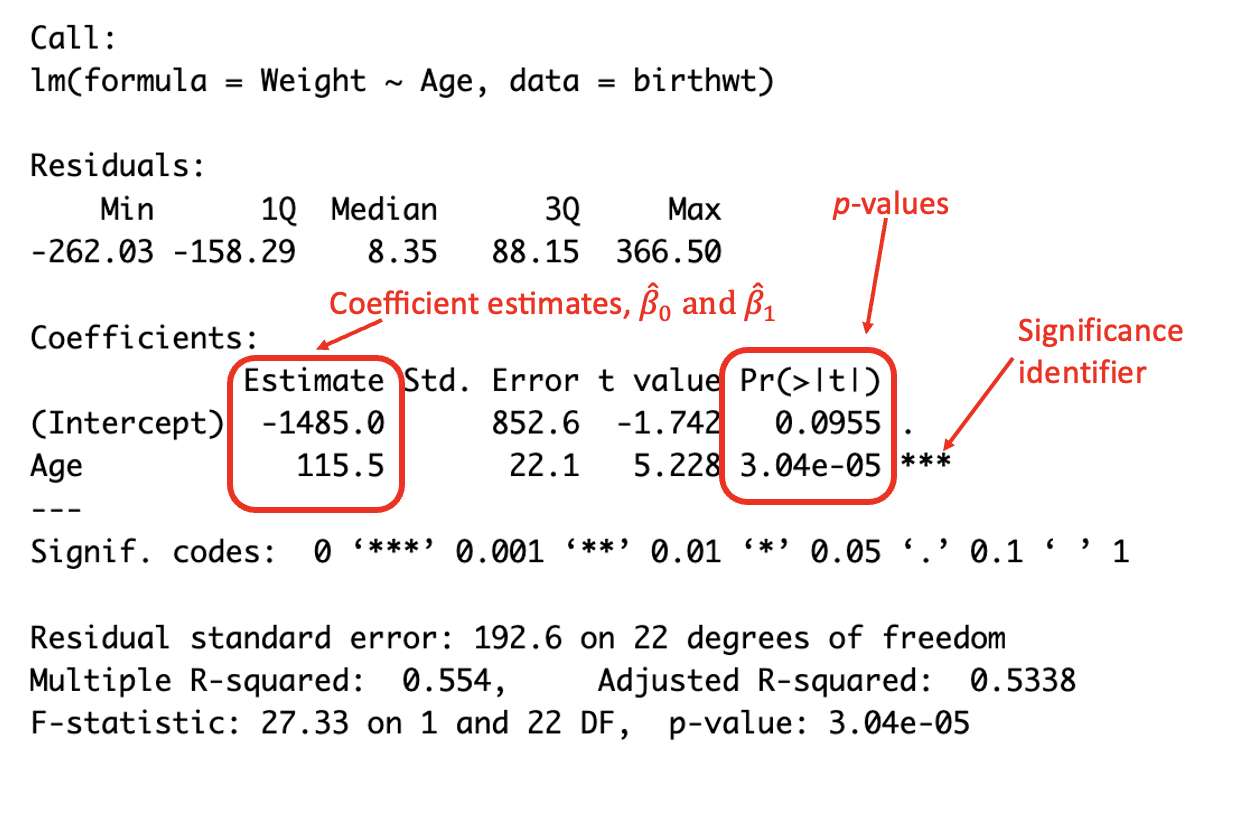

Including the linear model as an argument in the function summary() in R provides summary statistics for the linear model. These statistics include the previously given coefficients, as well as the corresponding standard errors and p-values (probability values) for the coefficients (among other information). This function is beneficial for exploring whether the independent variables are statistically significant and improve the model, as a small p-value (typically chosen to be less than 0.05) indicates that there is a statistically significant relationship between variables.

##

## Call:

## lm(formula = Weight ~ Age, data = birth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -262.03 -158.29 8.35 88.15 366.50

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1485.0 852.6 -1.742 0.0955 .

## Age 115.5 22.1 5.228 3.04e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 192.6 on 22 degrees of freedom

## Multiple R-squared: 0.554, Adjusted R-squared: 0.5338

## F-statistic: 27.33 on 1 and 22 DF, p-value: 3.04e-05

(#fig:image coef summary)Interpreting the summary output

In this case, it can be seen that the p-value for the Age covariate is \(<0.05\) and is therefore statistically significant to the 5% level and improves the model (and therefore should remain in the model).

A line of best fit using the linear model can be added to the above plot through using the function abline(), adding the entire linear model as an argument of the function.

#plot birth weight against gestational age

plot(birth$Weight ~ birth$Age,

xlab = "Gestational age (in weeks)",

ylab = "Birth weight (in grams)",

main = "Gestational age vs birth weight")

#add line for linear model

abline(birth_simple_lm)

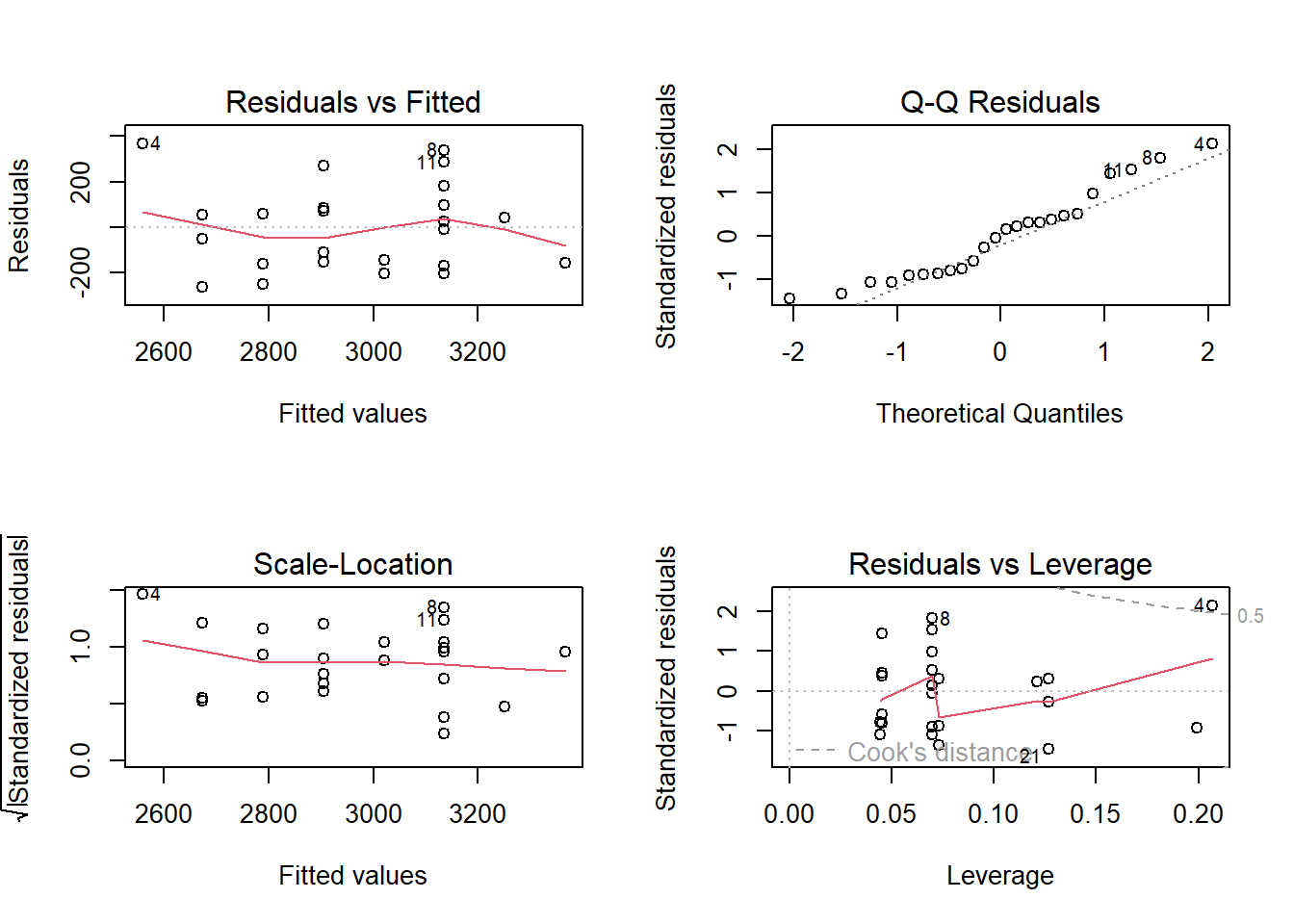

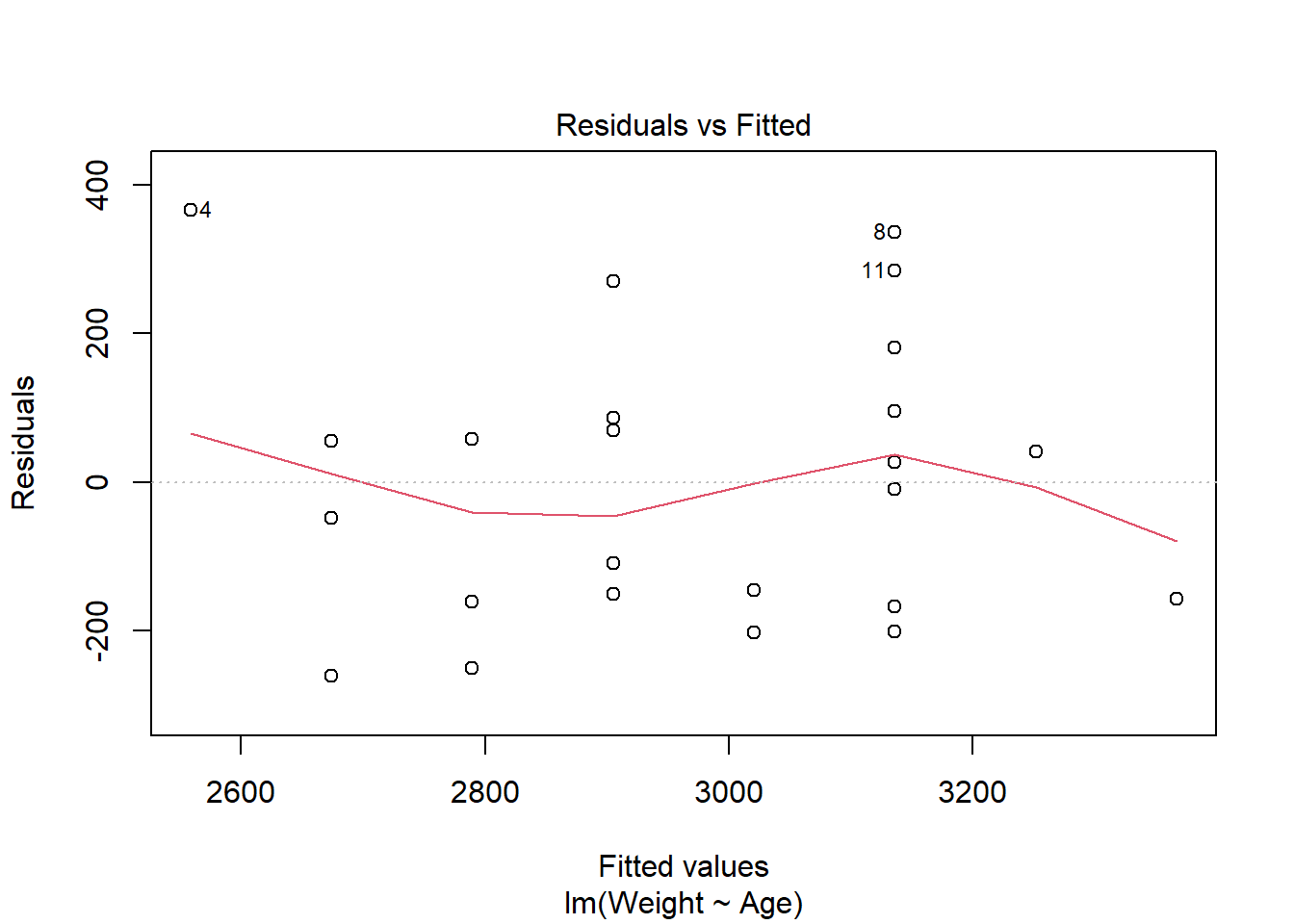

To create diagnostic plots for the linear model to check the required assumptions, the function plot() can be used with the model as the argument. This creates four plots, each having a different purpose. To produce only one of the four plots, add an argument for the plot index you wish to show (the fourth plot is combined of Cook’s distance and Residuals vs Leverage plots, to index the fourth plot, include 5 as an argument).

The first plot is the Residuals vs Fitted plot, used to check the linearity assumption.

In this plot, there should be no pattern and the red line should be approximately horizontal at zero for the normality assumption to hold. The residuals plot for the birth data is quite horizontal and is based around zero, however, there is a slight indication of a pattern meaning that there could be some problem with the linear model. This problem could be many things, possibly indicating that the relationship is not linear and instead quadratic for example.

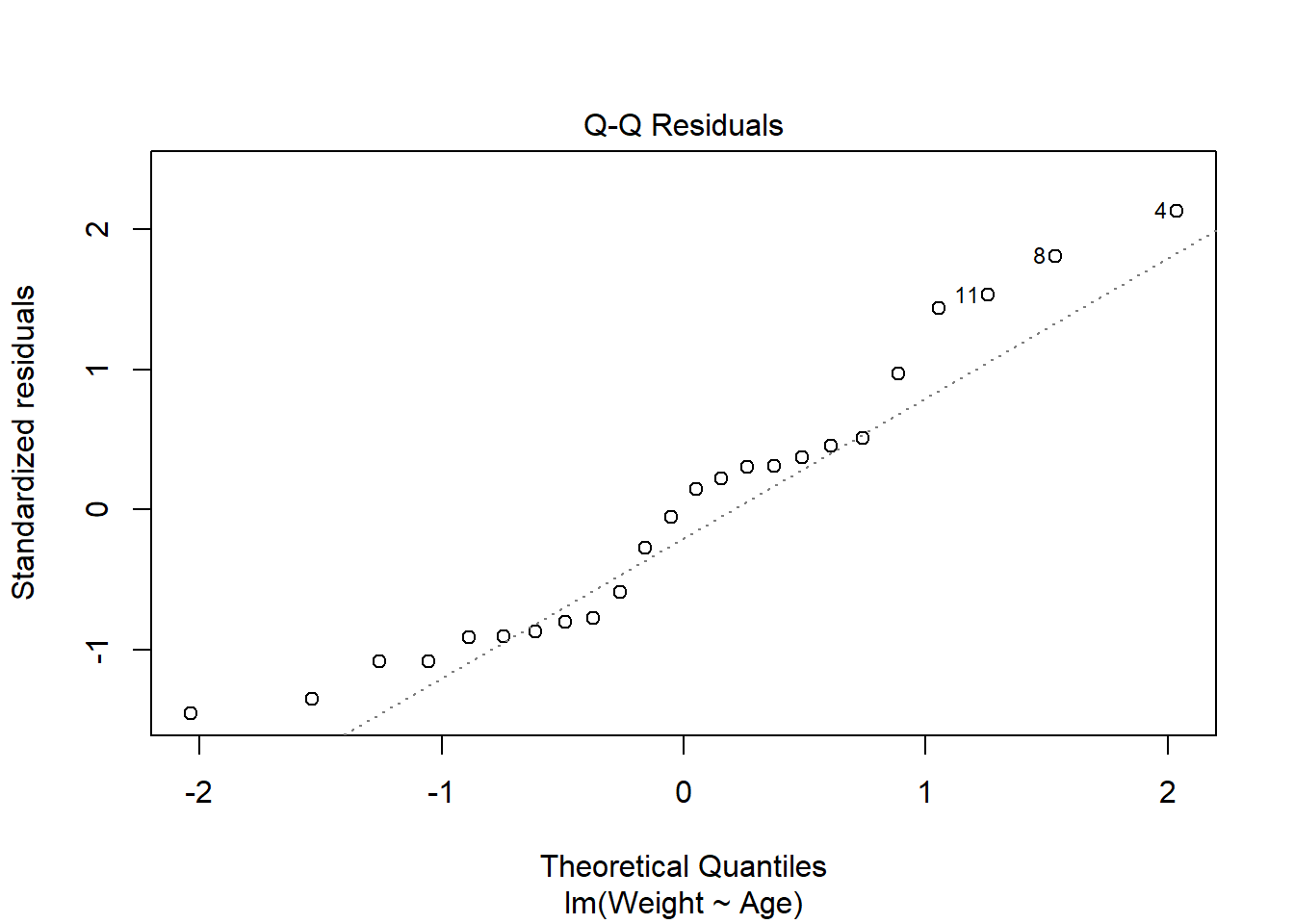

The second plot is the Normal Q-Q plot, similar to that produced by the qqnorm() function discussed previously, but now is used to check the normality assumption of the residuals.

The plot for the birth data does show some problems with the normality assumption given that the points to not all approximately fall on the reference line. This means that the normality assumption cannot be assumed.

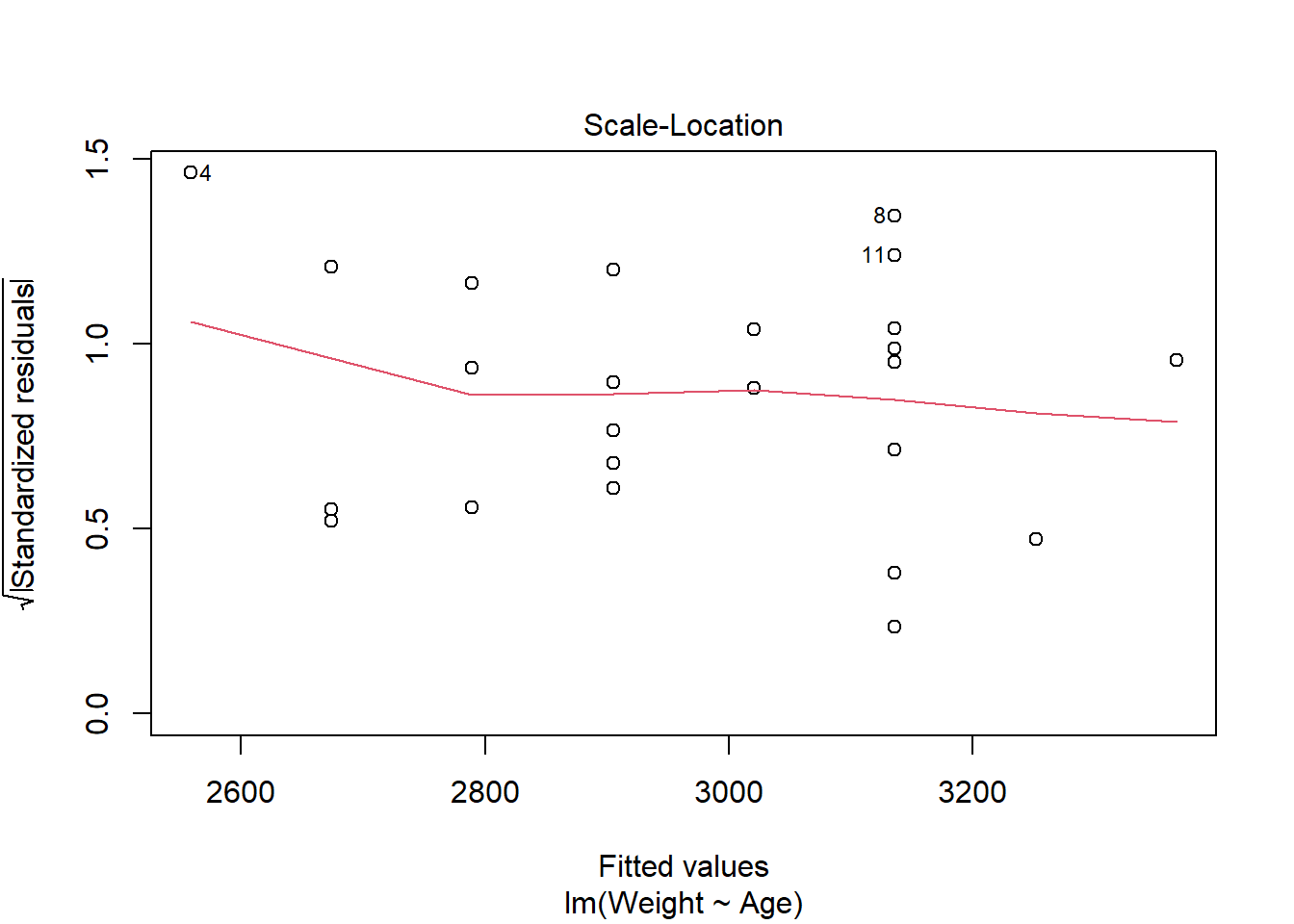

The third plot is the Scale-Location plot, or the Spread-Location plot, used to verify the homoscedasticity (homogeneity of variance). For the assumption to be upheld, the line should be approximately horizontal with the points equally dispersed.

In this case, the points are approximately equally spread out with the reference line being mostly horizontal, indicating that the homoscedasticity assumption may be upheld.

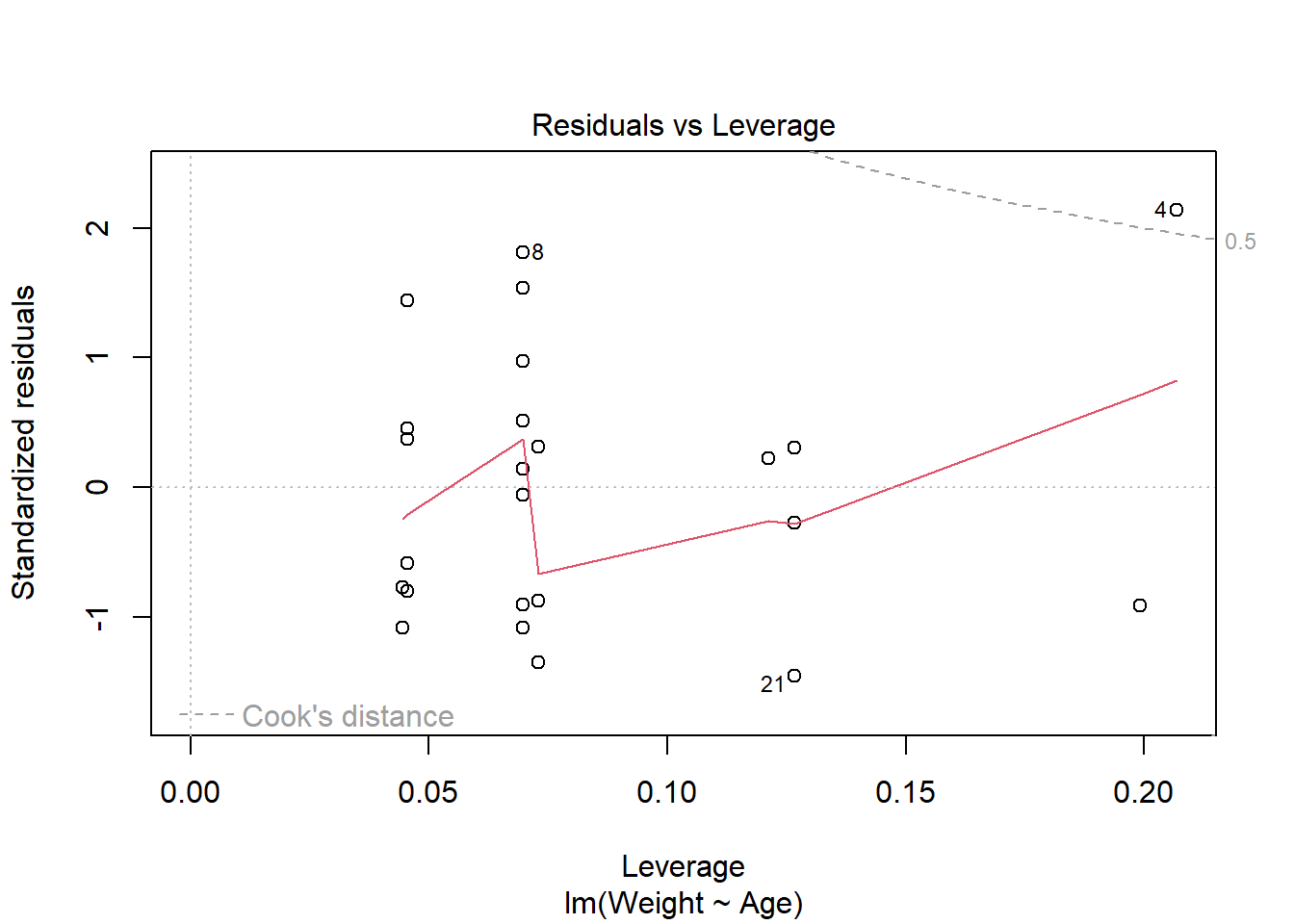

Finally, the fourth plot is the Residuals vs Leverage plot, used for identifying outlier points that have high leverage. These are points that may impact the results of the regression analysis if they are included or excluded, although not all outliers are influential to alter the results. This plot identifies the 3 most extreme values.

This plot identifies the 3 most extreme points (#4, #8 and #21), where point number 4 is identified as influential through using the measure of Cook’s distance. There is evidence that this point will alter the results of the regression analysis so there should be some consideration whether to include this point or not.

4.2.2 Polynomial regression

The data is not always best described by a linear relationship between the dependent and independent variables, for example, there could be a quadratic relationship between the variables.

The following are examples of polynomial regression models:

- A quadratic function: \[ Y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \epsilon_i.\]

- A polynomial of degree 4: \[Y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \beta_3 x_i^3 + \beta_4 x_i^4+ \epsilon_i.\]

For example, to fit a simple polynomial regression model with a quadratic function to the birth weight dataset used above, the quadratic term needs to be created and added to the data, then the function lm() can be used inputting the model formula. Alternatively, the quadratic term can be included in the formula within the function I() which lets R know to include that term as a separate term within the model. Another option is to use the function poly() with arguments for the independent variable and the degree of polynomial wanted, making the code more efficient when higher order polynomials are used in particular, instead of typing out a long equation with many terms.

#create the quadratic term and add to data before modelling

birth$Age2 <- birth$Age^2

birth_quad_lm1 <- lm(Weight ~ Age + Age2, data = birth)

summary(birth_quad_lm1)##

## Call:

## lm(formula = Weight ~ Age + Age2, data = birth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -276.86 -156.62 5.87 99.72 340.27

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7288.668 17631.783 0.413 0.684

## Age -342.639 919.905 -0.372 0.713

## Age2 5.969 11.980 0.498 0.624

##

## Residual standard error: 196 on 21 degrees of freedom

## Multiple R-squared: 0.5592, Adjusted R-squared: 0.5173

## F-statistic: 13.32 on 2 and 21 DF, p-value: 0.0001837#alternatively, include quadratic term within I()

birth_quad_lm2 <- lm(Weight ~ Age + I(Age^2), data = birth)

summary(birth_quad_lm2) #produces the same model##

## Call:

## lm(formula = Weight ~ Age + I(Age^2), data = birth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -276.86 -156.62 5.87 99.72 340.27

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7288.668 17631.783 0.413 0.684

## Age -342.639 919.905 -0.372 0.713

## I(Age^2) 5.969 11.980 0.498 0.624

##

## Residual standard error: 196 on 21 degrees of freedom

## Multiple R-squared: 0.5592, Adjusted R-squared: 0.5173

## F-statistic: 13.32 on 2 and 21 DF, p-value: 0.0001837#alternatively, include quadratic term within poly()

birth_quad_lm3 <- lm(Weight ~ poly(x = Age, degree = 2, raw = TRUE),

data = birth)

summary(birth_quad_lm3) #produces the same model##

## Call:

## lm(formula = Weight ~ poly(x = Age, degree = 2, raw = TRUE),

## data = birth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -276.86 -156.62 5.87 99.72 340.27

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7288.668 17631.783 0.413 0.684

## poly(x = Age, degree = 2, raw = TRUE)1 -342.639 919.905 -0.372 0.713

## poly(x = Age, degree = 2, raw = TRUE)2 5.969 11.980 0.498 0.624

##

## Residual standard error: 196 on 21 degrees of freedom

## Multiple R-squared: 0.5592, Adjusted R-squared: 0.5173

## F-statistic: 13.32 on 2 and 21 DF, p-value: 0.0001837The output from the summary() function works the same for non-linear models as it does for linear, with the coefficient estimates corresponding to the values of \(\alpha\) for the intercept and the \(\beta\) value(s) for the covariate(s). In this case, it can be seen that adding the quadratic term for age does not improve upon the linear model given that the p-value (Pr(>|t|)) is not statistically significant. This conclusion is reasonable given that the line of best fit for the linear model fits the birth weight data well and the data does not show a quadratic trend.

The welding dataset below contains information from the Welding Institute in Abingdon, providing \(n=21\) measurements of currents in amps with the corresponding minimum diameter of the weld. Given that the diameter of the weld depends on the amount of current, Current is the independent variable and Diameter is the dependent variable.

#create welding dataset

welding <- data.frame(Current = c(7.82, 8.00, 7.95, 8.07, 8.08, 8.01, 8.33,

8.34, 8.32, 8.64, 8.61, 8.57, 9.01, 8.97,

9.05, 9.23, 9.24, 9.24, 9.61, 9.60, 9.61),

Diameter = c(3.4, 3.5, 3.3, 3.9, 3.9, 4.1, 4.6, 4.3, 4.5,

4.9, 4.9, 5.1, 5.5, 5.5, 5.6, 5.9, 5.8, 6.1,

6.3, 6.4, 6.2))The welding data can be modelled in the same way as the birth weight dataset, using the function lm(), as seen in the example below.

#simple linear model

weld_simple_lm <- lm(Diameter ~ Current, data = welding)

summary(weld_simple_lm)##

## Call:

## lm(formula = Diameter ~ Current, data = welding)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.42623 -0.07282 0.01637 0.08269 0.34586

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -9.45427 0.65526 -14.43 1.09e-11 ***

## Current 1.65793 0.07531 22.01 5.53e-15 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.2012 on 19 degrees of freedom

## Multiple R-squared: 0.9623, Adjusted R-squared: 0.9603

## F-statistic: 484.6 on 1 and 19 DF, p-value: 5.529e-15#quadratic model

weld_quad_lm <- lm(Diameter ~ Current + I(Current^2), data = welding)

summary(weld_quad_lm)##

## Call:

## lm(formula = Diameter ~ Current + I(Current^2), data = welding)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.31023 -0.10023 -0.00496 0.09880 0.35197

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -41.5662 9.9392 -4.182 0.000560 ***

## Current 9.0430 2.2833 3.960 0.000917 ***

## I(Current^2) -0.4227 0.1306 -3.236 0.004589 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.1644 on 18 degrees of freedom

## Multiple R-squared: 0.9761, Adjusted R-squared: 0.9735

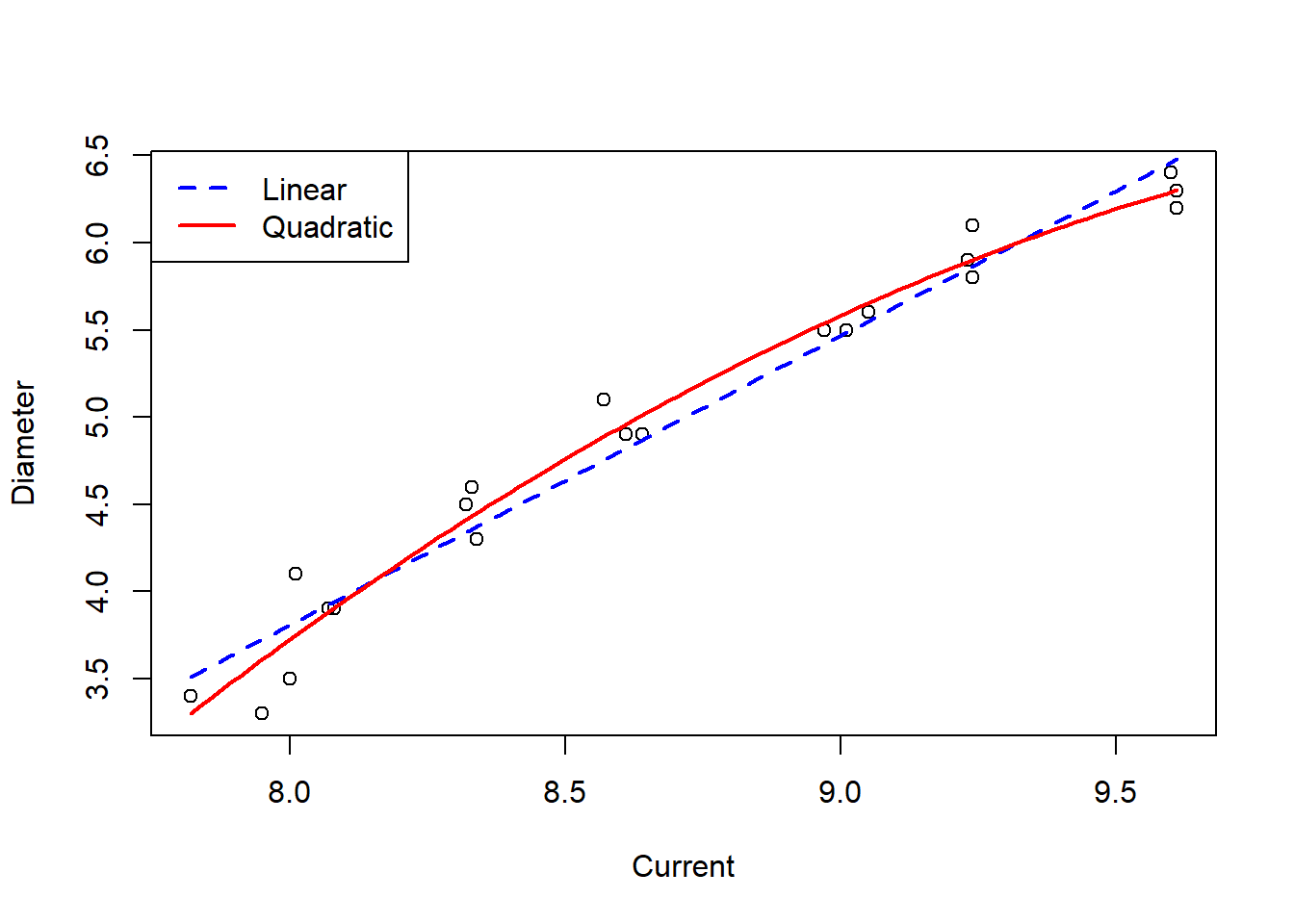

## F-statistic: 368.3 on 2 and 18 DF, p-value: 2.501e-15Unlike with the birth weight quadratic model, the addition of a quadratic term to the model for the welding data improves the fit of the model, given that both the linear and quadratic terms are statistically significant at the 5% significance level. This improved fit can be demonstrated graphically using the function predict() with arguments for the model you wish to predict from and a new dataset with a range of values you wish to predict the values of the dependent variable from (typically a sequence of evenly spaced numbers from the minimum to maximum values of your independent variable).

#made a new dataset

weld.new <- data.frame(Current = seq(from = min(welding$Current),

to = max(welding$Current),

length.out = 100))

#use the predict function

pred_simple_lm <- predict(weld_simple_lm, newdata = weld.new)

pred_quad_lm <- predict(weld_quad_lm, newdata = weld.new)

#basic plot for relationship between variables

plot(Diameter ~ Current, data = welding)

#add lines for each of the sets of predicted values

lines(pred_simple_lm ~ weld.new$Current, col = "blue", lty = 2, lwd = 2)

lines(pred_quad_lm ~ weld.new$Current, col = "red", lty = 1, lwd = 2)

#add a legend for clarity

legend("topleft", c("Linear", "Quadratic"),

col = c("blue", "red"), lty = c(2,1), lwd = 2)

The plot showing lines of best fit for both the simple linear model and the quadratic model demonstrate the improved fit of the quadratic model, with the added flexibility of the curve matching the trend of the data better.

This process can be extended for including higher degrees of polynomials in the regression models, although it is important to be wary of overfitting the model to the data as this risks the model only having use for inference to the original dataset.

4.2.3 Non-linear regression

The relationships being explored are not always best described by a linear relationship. In these cases, the data can be transformed, for example using logarithms, square roots and exponentials, to fit a non-linear model which is more flexible, potentially explaining the relationship between variables better. For a regression model to be non-linear, \(Y\) must be a non-linear function of the parameters (e.g. \(\beta_0\) and \(\beta_1\)), however, \(Y\) can still be a linear function of the covariates \(x\).

The following are examples of non-linear regression models:

- Squared value of the \(\beta\) coefficient: \[Y_i = \beta_0 + \beta_1^2x_i + \epsilon_i.\]

- Logarithmic: \[\log(Y_i) = \beta_0 + \beta_1 x_i + \epsilon_i\] which implies \[Y_i = \exp(\beta_0 + \beta_1 x_i + \epsilon_i)=\exp(\beta_0)\exp(\beta_1 x_i)\exp(\epsilon)\] a relationship which is multiplicative, meaning that a unit increase in \(x_i\) corresponds to \(Y_i\) being multiplied by a value of \(\exp(\beta x_i)\), instead of an additive effect of \(\beta x_i\) like with a linear model.

- Square root: \[Y_i^{1/2}=\beta_0 + \beta_1 x_i + \epsilon_i.\]

- Negative reciprocal: \[-\frac{1}{Y_i}= \beta_0 + \beta_1 x_i + \epsilon_i.\]

The function nls() can be used for non-linear regression models and estimate the parameters via a non-linear least squares approach (a non-linear approach to finding the line of best fit for the given data). To demonstrate this approach, the Michaelis-Menten equation for kinetics given below can be used, given that there is a non-linear relationship between the dependent variable and the parameters.

\[ Y_i = \frac{\beta_0 x_i}{\beta_1+x_i} \]

#simulate some data

set.seed(100)

x<-1:100

y<-((runif(1,20,30)*x)/(runif(1,0,20)+x)) + rnorm(100,0,1)

#model the data using the function nls(), if no start values are given, a

#warning may occur, but R will just choose the start values itself instead

nonlinear_mod <- nls(y ~ a*x/(b+x)) ## Warning in nls(y ~ a * x/(b + x)): No starting values specified for some parameters.

## Initializing 'a', 'b' to '1.'.

## Consider specifying 'start' or using a selfStart model##

## Formula: y ~ a * x/(b + x)

##

## Parameters:

## Estimate Std. Error t value Pr(>|t|)

## a 22.9682 0.1928 119.11 <2e-16 ***

## b 4.9492 0.3010 16.44 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.023 on 98 degrees of freedom

##

## Number of iterations to convergence: 6

## Achieved convergence tolerance: 2.029e-07The summary function works in the same way as for the linear models, providing the estimated values of the model parameters, in this case, \(\beta_0=6.4946\) and \(\beta_1 =1.0765\).

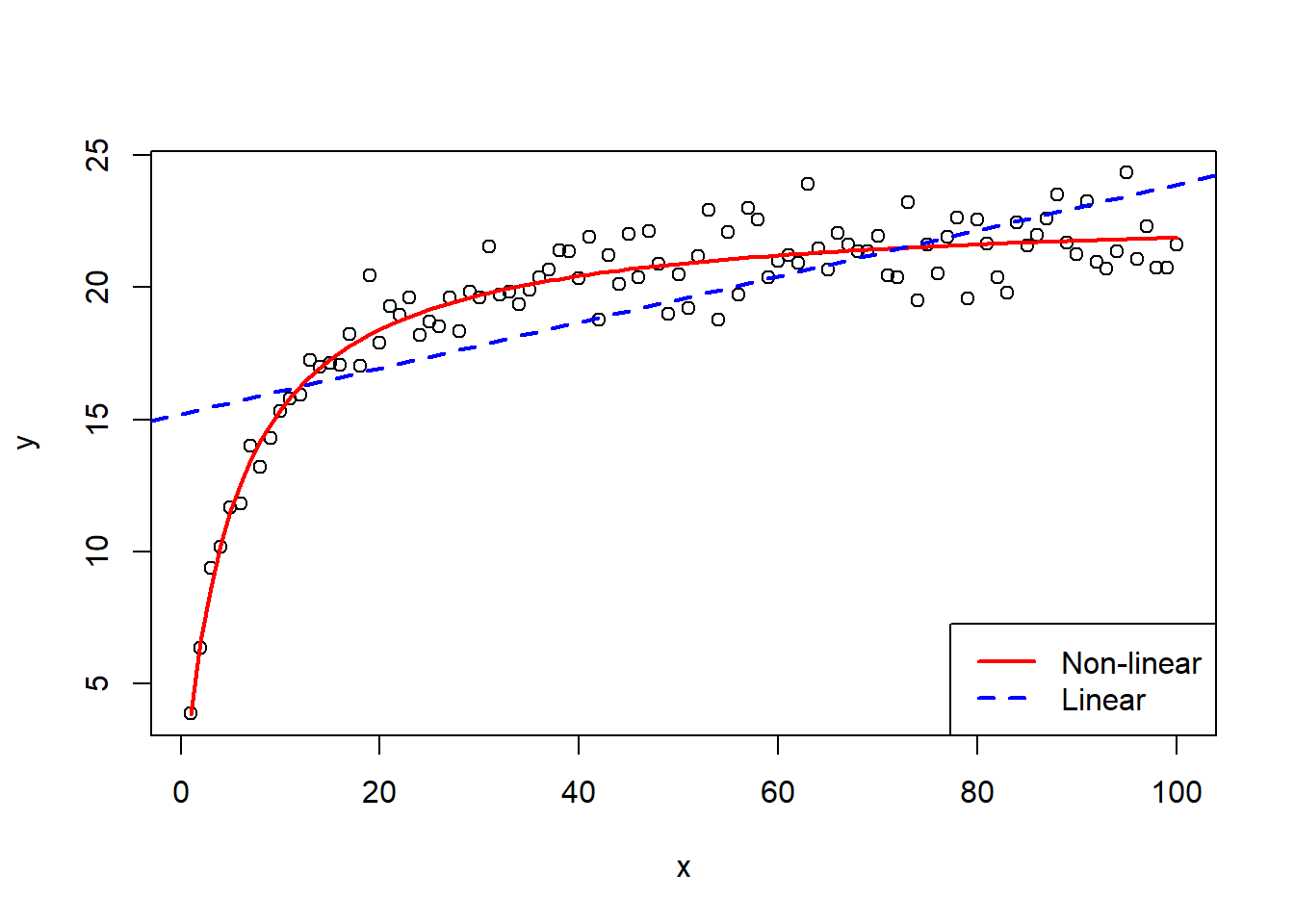

To visualise this equation with the non-linear regression model fitted, the function plot() can be used as with the linear models, with the addition of the function lines() as used with the polynomial regression models with an argument for the x-axis values and the predicted values.

#plot the data

plot(x,y)

#add a line of best fit

lines(x, predict(nonlinear_mod), col = "red", lty = 1, lwd = 2)

#add a linear regression line for comparison purposes

abline(lm(y ~ x), col = "blue", lty = 2, lwd = 2)

#add a legend for clarity

legend("bottomright", c("Non-linear", "Linear"),

col = c("red", "blue"), lty = c(1,2), lwd = 2)

It can be seen in the plot that the non-linear line fits the data very well, much better than the simple linear regression model added to the plot for comparison purposes.

4.3 Multiple regression

Multiple regression can be described as an extension of simple regression where you still only have one dependent variable but there are multiple independent variables. For \(p\) independent variables, the model can be written as

\[ Y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + ... + \beta_p x_{ip} + \epsilon_i,\] where \(\epsilon_i \sim N(0, \sigma^2)\) for \(i=1,...,n\).

An important assumption of multiple regression modelling is multicollinearity, meaning that it is assumed that the independent variables are not highly correlated with one another. If this assumption is not met, it can make identifying which variables better explain the dependent variable better much more challenging.

The birth dataset can be used to demonstrate multiple linear regression given that there are 2 independent variables included in the data, Sex and Age, modelled as follows.

\[ Weight_i =\beta_0 + \beta_1 Sex_i + \beta_2 Age_i + \epsilon_i\]

To perform multiple linear regression in R, the function lm() can be used in the same way as for simple linear regression, however, with the additional variables given in the formula as in the code below.

#multiple linear regression

birth_multi_lm1 <- lm(Weight ~ Sex + Age, data = birth)

summary(birth_multi_lm1)##

## Call:

## lm(formula = Weight ~ Sex + Age, data = birth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -257.49 -125.28 -58.44 169.00 303.98

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1447.24 784.26 -1.845 0.0791 .

## Sex -163.04 72.81 -2.239 0.0361 *

## Age 120.89 20.46 5.908 7.28e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 177.1 on 21 degrees of freedom

## Multiple R-squared: 0.64, Adjusted R-squared: 0.6057

## F-statistic: 18.67 on 2 and 21 DF, p-value: 2.194e-05The output from the summary function shows that both independent variables are statistically significant at the 5% significance level, and hence birth weight depends on both sex and gestational age of the baby. Interpreting the results is done in the same way as for simple models, with the estimates corresponding to the coefficients as follows:

- \(\alpha\)=-1447.24

- \(\beta_1\)=-163.04

- \(\beta_2\)=120.89

Through using the function update(), you can add or remove variables from a model without needing to re-fit the model yourself. This is particularly useful when you have a model with many parameters, where instead of needing to type out the model again with each of the parameters, you can simply update the existing model to either add another parameter or remove a parameter if it is not needed.

To remove a variable from a model, you use the function in the form update(model, ~. - term). For example, to update the model given above to remove the covariate Age, you would use the below code.

#remove the Age covariate from the multiple linear regression model

birth_multi_lm2 <- update(birth_multi_lm1, ~. - Age)

birth_multi_lm2##

## Call:

## lm(formula = Weight ~ Sex, data = birth)

##

## Coefficients:

## (Intercept) Sex

## 3136.7 -112.7Alternatively, if you wish to add a variable, you use the formula in the form update(model, ~. + term). For example, to add the term for Age back into the model, you would use the below code, which results in the same model as originally fitted.

#remove the Age covariate from the multiple linear regression model

update(birth_multi_lm2, ~. + Age)##

## Call:

## lm(formula = Weight ~ Sex + Age, data = birth)

##

## Coefficients:

## (Intercept) Sex Age

## -1447.2 -163.0 120.9The update() function also allows for the data being modelled to be updated through adding an argument for data =. This is demonstrated in the code below.

#create an example dataset

y <- c(1:20)

x1 <- y^2

z1 <- y*3

update_example1 <- data.frame(x = x1, y = y, z = z1)

#fit linear model

example_mod1 <- lm(y ~ x + z, data = update_example1)

summary(example_mod1)## Warning in summary.lm(example_mod1): essentially perfect fit: summary may be unreliable##

## Call:

## lm(formula = y ~ x + z, data = update_example1)

##

## Residuals:

## Min 1Q Median 3Q Max

## -4.774e-15 -4.318e-16 4.340e-17 5.935e-16 3.439e-15

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.046e-15 1.160e-15 -3.488e+00 0.00282 **

## x -3.823e-17 1.177e-17 -3.249e+00 0.00472 **

## z 3.333e-01 8.480e-17 3.931e+15 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.559e-15 on 17 degrees of freedom

## Multiple R-squared: 1, Adjusted R-squared: 1

## F-statistic: 1.368e+32 on 2 and 17 DF, p-value: < 2.2e-16#create new dataset

x2 <- y^3

z2 <- y*4

update_example2 <- data.frame(x = x2, y = y, z = z2)

#update the dataset in the model

example_mod2 <- update(example_mod1, data = update_example2)

summary(example_mod2)## Warning in summary.lm(example_mod2): essentially perfect fit: summary may be unreliable##

## Call:

## lm(formula = y ~ x + z, data = update_example2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.681e-16 -1.410e-18 3.702e-17 6.826e-17 1.084e-16

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.000e+00 7.773e-17 0.000e+00 1

## x 0.000e+00 2.849e-20 0.000e+00 1

## z 2.500e-01 3.016e-18 8.289e+16 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.204e-16 on 17 degrees of freedom

## Multiple R-squared: 1, Adjusted R-squared: 1

## F-statistic: 2.293e+34 on 2 and 17 DF, p-value: < 2.2e-164.4 Generalised linear regression

For simple regression modelling, there is the assumption of normality for the dependent variable, however, this assumption is not always met, for example, with count data (e.g. number of people with a disease) which is often modelled with a Poisson distribution, or binary data (e.g. beetles killed or not killed) which is often modelled with a Bernoulli distribution. In these cases of non-normal data, alternative models are required, which is where generalised linear modelling is beneficial with its relaxed distributional assumption.

The generalised linear model is written in the form \[g(\mu_i) = \eta_i = \boldsymbol{x}_i^T \boldsymbol{\beta},\] where \(\mu_i=E(Y_i)\) is the expected value of \(Y_i\), \(\eta_i\) is the linear predictor and \(g(\mu_i)\) is the link function between the distribution of \(\boldsymbol{Y}\) and the linear predictor.

An important assumption for generalised linear regression is that the dependent variable \(\boldsymbol{Y}\) is assumed to be independent and a member of the exponential family (e.g. normal, Poisson, Bernoulli, geometric, exponential, …).

The link function depends on the distribution of the data type and the dependent variable, where the table below provides the three main link functions and the corresponding data types and distributions.

| Data type | Response family | Link | Name |

|---|---|---|---|

| Continuous | Normal/Gaussian/log-normal/gamma | \(g(\mu)=\mu\) | Identity |

| Count | Poisson | \(g(\mu)=\log(\mu)\) | Log |

| Binary | Bernoulli/binomial | \(g(\mu)=\log\left(\frac{p}{1-p}\right)\) | Logit |

To fit generalised linear models in R, the function glm() can be used, in a very similar way to the function lm() seen in earlier sections, but with the addition of a (exponential) family argument. The default family is normal, hence why if no family is specified, the functions glm() and lm() produce identical models. However, if the data is not normal, the exponential family that the data is a member of must be specified.

When dealing with count data, the Poisson log-linear model is most commonly used, taking the following form. \[ Y_i \sim Poisson(\mu_i), \text{ } \log(\mu_i)=\boldsymbol{x}_i^T \boldsymbol{\beta}\] It is important to note that the Poisson distribution assumes that the mean and variance are equal. If over-dispersion (the variance is greater than the mean) is present, a negative-binomial model may be preferable.

To demonstrate generalised linear modelling with count data, the ccancer dataset from the package GLMsData can be utilised. This dataset gives the count of deaths (Count) due to cancer within three different regions of Canada (Region), providing additional covariates for the gender of each individual (Gender) and the site of the cancer (Site). More information on this dataset can be found through using the help function.

## Count Gender Region Site Population

## 1 3500 M Ontario Lung 11874400

## 2 1250 M Ontario Colorectal 11874400

## 3 0 M Ontario Breast 11874400

## 4 1600 M Ontario Prostate 11874400

## 5 540 M Ontario Pancreas 11874400

## 6 2400 F Ontario Lung 11874400To model this data, the function glm() can be used again, but with specifying the family argument as family = poisson as follows, where the following model is an intercept only model, including a 1 instead of any independent variables.

#fit the glm for the ccancer data to explore the effect of gender on the

#count of cancer deaths

ccancer_glm1 <- glm(Count ~ 1, family = "poisson", data = ccancer)

summary(ccancer_glm1)##

## Call:

## glm(formula = Count ~ 1, family = "poisson", data = ccancer)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 6.702984 0.006396 1048 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 35187 on 29 degrees of freedom

## Residual deviance: 35187 on 29 degrees of freedom

## AIC: 35380

##

## Number of Fisher Scoring iterations: 6Given that there are covariates included in this dataset, it is important to explore the relationship they may have with the response. The following model contains a main effect for gender, exploring the relationship between the gender of individuals and cancer deaths.

#fit the glm for the ccancer data to explore the effect of gender on the

#count of cancer deaths

ccancer_glm2 <- glm(Count ~ Gender, family = "poisson", data = ccancer)

summary(ccancer_glm2)##

## Call:

## glm(formula = Count ~ Gender, family = "poisson", data = ccancer)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 6.621406 0.009422 702.77 <2e-16 ***

## GenderM 0.157000 0.012831 12.24 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 35187 on 29 degrees of freedom

## Residual deviance: 35037 on 28 degrees of freedom

## AIC: 35232

##

## Number of Fisher Scoring iterations: 6It can be seen in this model that the inclusion of the covariate Gender is statistically significant, therefore there is a relationship between the gender of an individual and the count of cancer deaths in Canada.

Exercise: Fit a Poisson GLM using the ccancer dataset exploring the relationship between the count of cancer deaths and the covariates for the cancer site and region in Canada. Is the relationship between the dependent and independent variables significant?

Another example of generalised linear modelling with count data can be seen as follows using the hodgkins dataset which contains information on 583 patients with Hodgkin’s disease. Within this information is the number of patients (count) with each combination of the histological type of disease (type) and the response to the treatment (rtreat).

hodgkins <- data.frame(count = c(74, 18, 12, 68, 16, 12, 154, 54, 58,

18, 10, 44),

type = c("Lp", "Lp", "Lp", "Ns", "Ns", "Ns", "Mc",

"Mc", "Mc", "Ld", "Ld", "Ld"),

rtreat = c("positive", "partial", "none", "positive",

"partial", "none", "positive", "partial",

"none", "positive", "partial", "none"))The information on the patients has been cross-classified, where the covariates type and rtreat are categorical variables with multiple levels each.

#fit a glm to the hodgkins dataset including both covariates in the model

hodgkins_glm1 <- glm(count ~ type + rtreat, family = poisson, data = hodgkins)

summary(hodgkins_glm1)##

## Call:

## glm(formula = count ~ type + rtreat, family = poisson, data = hodgkins)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 2.8251 0.1413 19.993 <2e-16 ***

## typeLp 0.3677 0.1533 2.399 0.0165 *

## typeMc 1.3068 0.1328 9.837 <2e-16 ***

## typeNs 0.2877 0.1559 1.845 0.0650 .

## rtreatpartial -0.2513 0.1347 -1.866 0.0621 .

## rtreatpositive 0.9131 0.1055 8.659 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 367.247 on 11 degrees of freedom

## Residual deviance: 68.295 on 6 degrees of freedom

## AIC: 143.66

##

## Number of Fisher Scoring iterations: 5Alternatively, a generalised linear model can be fitted with both the main effects and an interaction. This model is known as the full or saturated model, where the interaction term can be included in addition to the main effects using a colon : between the variables you wish to include an interaction term for. This method is beneficial for when you just want to include an interaction, not necessarily the corresponding main effects, however, if you want to include both the interaction and corresponding main effects to a model, an asterisk * can be used between the chosen covariates. Both these methods are demonstrated below and return the same model.

#fit the saturated Poisson GLM to the hodgkins dataset with a colon

hodgkins_glm2 <- glm(count ~ type + rtreat + type:rtreat, family = poisson,

data = hodgkins)

summary(hodgkins_glm2)##

## Call:

## glm(formula = count ~ type + rtreat + type:rtreat, family = poisson,

## data = hodgkins)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 3.7842 0.1508 25.101 < 2e-16 ***

## typeLp -1.2993 0.3257 -3.990 6.62e-05 ***

## typeMc 0.2763 0.1999 1.382 0.167031

## typeNs -1.2993 0.3257 -3.990 6.62e-05 ***

## rtreatpartial -1.4816 0.3503 -4.229 2.34e-05 ***

## rtreatpositive -0.8938 0.2798 -3.195 0.001400 **

## typeLp:rtreatpartial 1.8871 0.5115 3.689 0.000225 ***

## typeMc:rtreatpartial 1.4101 0.3981 3.542 0.000397 ***

## typeNs:rtreatpartial 1.7693 0.5182 3.414 0.000640 ***

## typeLp:rtreatpositive 2.7130 0.4185 6.483 9.00e-11 ***

## typeMc:rtreatpositive 1.8703 0.3194 5.856 4.75e-09 ***

## typeNs:rtreatpositive 2.6284 0.4199 6.260 3.86e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 3.6725e+02 on 11 degrees of freedom

## Residual deviance: 1.9540e-14 on 0 degrees of freedom

## AIC: 87.363

##

## Number of Fisher Scoring iterations: 3#fit the saturated Poisson GLM to the hodgkins dataset with an asterisk

hodgkins_glm3 <- glm(count ~ type*rtreat, family = poisson, data = hodgkins)

summary(hodgkins_glm3)##

## Call:

## glm(formula = count ~ type * rtreat, family = poisson, data = hodgkins)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 3.7842 0.1508 25.101 < 2e-16 ***

## typeLp -1.2993 0.3257 -3.990 6.62e-05 ***

## typeMc 0.2763 0.1999 1.382 0.167031

## typeNs -1.2993 0.3257 -3.990 6.62e-05 ***

## rtreatpartial -1.4816 0.3503 -4.229 2.34e-05 ***

## rtreatpositive -0.8938 0.2798 -3.195 0.001400 **

## typeLp:rtreatpartial 1.8871 0.5115 3.689 0.000225 ***

## typeMc:rtreatpartial 1.4101 0.3981 3.542 0.000397 ***

## typeNs:rtreatpartial 1.7693 0.5182 3.414 0.000640 ***

## typeLp:rtreatpositive 2.7130 0.4185 6.483 9.00e-11 ***

## typeMc:rtreatpositive 1.8703 0.3194 5.856 4.75e-09 ***

## typeNs:rtreatpositive 2.6284 0.4199 6.260 3.86e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 3.6725e+02 on 11 degrees of freedom

## Residual deviance: 1.9540e-14 on 0 degrees of freedom

## AIC: 87.363

##

## Number of Fisher Scoring iterations: 3It can be seen from looking at the results from both the main effects model and the saturated model, particularly the p-values, that the terms in the saturated model are more statistically significant, indicating that the saturated model is a better fit for the data.

Additionally, through testing whether the interaction term is needed, you are able to test whether the covariates are independent from one another or whether they are correlated/associated. In this case, the inclusion of the interaction term improves the model and all interaction terms are statistically significant, therefore there is evidence that the covariates type and rtreat are independent from one another.

If the data is binary, meaning that there are only two possible outcomes, the family can be specified as family = binomial to fit a binomial logistic regression model with a logit link, the default link for a binomial family which assumes that the errors follow a logistic distribution. Alternatively, a probit link can be used, through changing the family argument to be family = binomial(link="probit"), which instead assumes that the errors follow a normal distribution, however, this is used less frequently.

The form of a binomial logistic regression model is given as \[Y_i|n_i, p_i \sim Binomial(n_i, p_i), \text{ logit}(p_i)=\log\left(\frac{p_i}{1-p_i}\right).\]

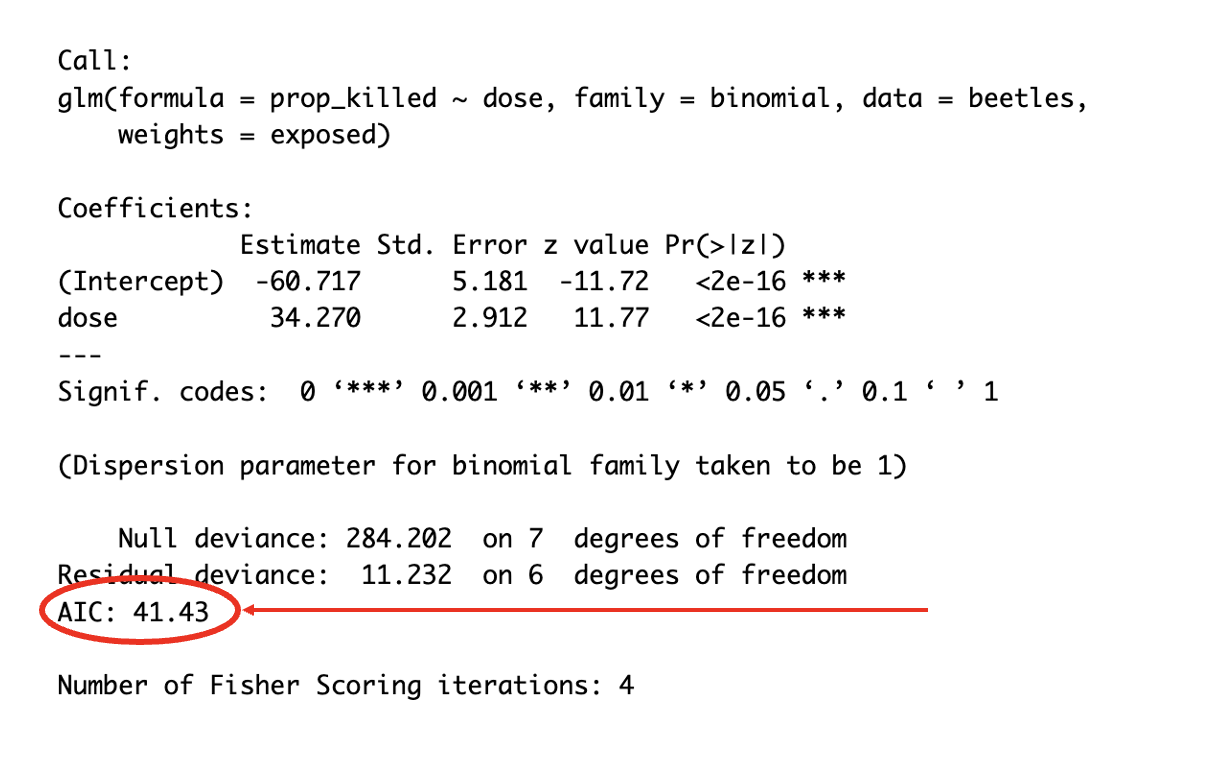

This can be seen in the code below using the beetles dataset, \(Y_i\) is the number of beetles killed, \(n_i\) is the number of beetles exposed and \(\boldsymbol{x}_i\) is the dose.

beetles <- data.frame(dose = c(1.6907, 1.7242, 1.7552, 1.7842, 1.8113, 1.8369,

1.861, 1.8839),

exposed = c(59, 60, 62, 56, 63, 59, 62, 60),

killed = c(6, 13, 18, 28, 52, 53, 61, 60))There are two ways to fit the binomial logistic regression model. Firstly, is to model the proportion of “successes” (in this example, it is the proportion of beetles killed) and weight by the number of trials (in this example, it is the number of beetles exposed).

#compute the proportion of beetles killed

beetles$prop_killed <- beetles$killed / beetles$exposed

#fit a binomial logistic regression model

beetles_glm_props <- glm(prop_killed ~ dose, data = beetles, family = binomial,

weights = exposed)

summary(beetles_glm_props)##

## Call:

## glm(formula = prop_killed ~ dose, family = binomial, data = beetles,

## weights = exposed)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -60.717 5.181 -11.72 <2e-16 ***

## dose 34.270 2.912 11.77 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 284.202 on 7 degrees of freedom

## Residual deviance: 11.232 on 6 degrees of freedom

## AIC: 41.43

##

## Number of Fisher Scoring iterations: 4Alternatively, the independent variable can be given as a matrix with two columns, one for the number of “successes” and the other for “failures” (in this example, a success is a beetle killed and a failure is a beetle not killed).

#fit a binomial logistic regression model with two columns to response

beetles_glm_matrix <- glm(cbind(killed, exposed - killed) ~ dose,

data = beetles, family = binomial)

summary(beetles_glm_matrix)##

## Call:

## glm(formula = cbind(killed, exposed - killed) ~ dose, family = binomial,

## data = beetles)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -60.717 5.181 -11.72 <2e-16 ***

## dose 34.270 2.912 11.77 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 284.202 on 7 degrees of freedom

## Residual deviance: 11.232 on 6 degrees of freedom

## AIC: 41.43

##

## Number of Fisher Scoring iterations: 4As seen from the summaries for the binomial logistic regression models from each approach, the approaches yield identical results, so it is unimportant which approach is taken.

4.5 Model predictions

4.5.1 Predictions with the formula and coefficients

Values of the dependent variable can be predicted through inputting the coefficient estimates found from the model summaries into the model formulae, given values of the independent variable(s).

To demonstrate predictions using just the model formula and the resulting coefficient estimates, firstly the simple linear regression model with the birth dataset will be used. As a reminder, the model was given as follows, fitted with the lm() function in R.

\[\text{Weight}_i = \beta_0 + \beta_1\text{Age}_i + \epsilon_i\]

The coefficients from the linear model given by the summary() function can then be used to predict the value of the birth weight for a baby at a given gestational age.

##

## Call:

## lm(formula = Weight ~ Age, data = birth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -262.03 -158.29 8.35 88.15 366.50

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1485.0 852.6 -1.742 0.0955 .

## Age 115.5 22.1 5.228 3.04e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 192.6 on 22 degrees of freedom

## Multiple R-squared: 0.554, Adjusted R-squared: 0.5338

## F-statistic: 27.33 on 1 and 22 DF, p-value: 3.04e-05Given the coefficient estimates from the model, the birth weight of a baby for a given gestational age can be predicted using the following formula.

\[ \text{Weight}_i = -1485.0 + 115.5 \times \text{Age}_i\]

Therefore, for example, a baby of gestational age 37.5 weeks, the birth weight is predicted as \[Weight_i=-1485.0 + 115.5 \times 37.5 = 2846.25g.\]

This method of prediction does not just work on simple linear regression models but can be used for regression modelling in general. For example, the multiple regression model for the birth dataset birth_multi_lm1, which has the following formula.

\[ Weight_i =\beta_0 + \beta_1 Sex_i + \beta_2 Age_i + \epsilon_i\]

If for example, you wanted to predict the birth weight of a baby girl at a gestational age of 38 weeks, you would use the following formula.

\[Weight = -1447.24 -163.04\times 1+120.89\times 38 = 2983.54g\]

Exercise: What is the expected birth weight for a baby boy at a gestational age of 39.5 weeks?

This method of prediction also works with generalised linear models using the results from a model fitted with the glm() function. To demonstrate this, the Poisson GLM fitted to the ccancer dataset exploring the relationship between gender and counts of cancer deaths is used. As a reminder, the model summary output is as follows.

##

## Call:

## glm(formula = Count ~ Gender, family = "poisson", data = ccancer)

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 6.621406 0.009422 702.77 <2e-16 ***

## GenderM 0.157000 0.012831 12.24 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 35187 on 29 degrees of freedom

## Residual deviance: 35037 on 28 degrees of freedom

## AIC: 35232

##

## Number of Fisher Scoring iterations: 6Using the resulting coefficient estimates from this model in the formula below to estimate the count of cancer deaths for a given gender. Since the log-link was used for this model, the coefficients require exponentiation in order to transform the log-count to just the count.

\[ \begin{aligned} \log(\hat{\mu}) &= 6.621406 + 0.157000 \times x_1 \\ \hat{\mu} &= \exp( 6.621406 + 0.157000 \times x_1) \end{aligned} \] where \(x_1=0\) if the individual is female and \(x_1=1\) if the individual is male.

Using this formula, the expected count of cancer deaths for women in from the dataset is \(\hat{\mu}=exp( 6.621406 + 0.157000)=879\) and for men is \(\hat{\mu}=exp( 6.621406)=751\).

4.5.2 Predictions with functions

As mentioned in previous sections, you can also predict values using functions, particularly the predict() function. To use this, include the model you wish to predict from as an argument, in addition to values of data you wish to predict the values of the dependent variable from as newdata.

The use of this function is demonstrated in the code below, where for the birth dataset, birth weight is predicted for a range of values from the quadratic model, birth_quad_lm2, fitted with the lm() function.

Firstly, a new dataset needs to be created. This dataset needs to contain the value(s) for which the dependent variable will be predicted using. If for example you wish to estimate the birth weight of a baby with gestational ages 36.5, 37.5 and 38.5, you can create a new dataset containing just these values, as in the code given below.

## Age

## 1 36.5

## 2 37.5

## 3 38.5Then, the predict function can be used, including the name of the model, birth_quad_lm2, and the new dataset to predict from, birth.new1.

The values of these predictions can be given as a table for ease of viewing using the data.table() function in the data.table package. To use this function, include the variables you wish to display as arguments and assign the variables names in the function for clarity.

## Age Weight

## <num> <num>

## 1: 36.5 2733.896

## 2: 37.5 2832.927

## 3: 38.5 2943.894Alternatively, a range of values can be given as the new dataset for assessing the fit of a given model. To demonstrate this, the birth weight will be predicted for a sequence of gestational age values, starting from the minimum observed age to the maximum observed age with 50 values in total.

#made a new dataset

birth.new2 <- data.frame(Age=seq(from = min(birth$Age),

to = max(birth$Age),

length.out = 50))

head(birth.new2)## Age

## 1 35.00000

## 2 35.14286

## 3 35.28571

## 4 35.42857

## 5 35.57143

## 6 35.71429Then, the predict function can be used, including the name of the model, birth_quad_lm2, and the new dataset to predict from, birth.new2.

#use the predict function

birth_predict_quad2 <- predict(birth_quad_lm2, newdata = birth.new2)

birth_predict_quad2## 1 2 3 4 5 6 7 8 9 10 11

## 2607.732 2618.590 2629.693 2641.038 2652.627 2664.460 2676.537 2688.857 2701.421 2714.228 2727.279

## 12 13 14 15 16 17 18 19 20 21 22

## 2740.574 2754.112 2767.894 2781.919 2796.188 2810.701 2825.457 2840.457 2855.700 2871.187 2886.918

## 23 24 25 26 27 28 29 30 31 32 33

## 2902.892 2919.110 2935.572 2952.277 2969.226 2986.418 3003.854 3021.534 3039.457 3057.624 3076.034

## 34 35 36 37 38 39 40 41 42 43 44

## 3094.688 3113.586 3132.727 3152.112 3171.741 3191.613 3211.729 3232.088 3252.691 3273.537 3294.628

## 45 46 47 48 49 50

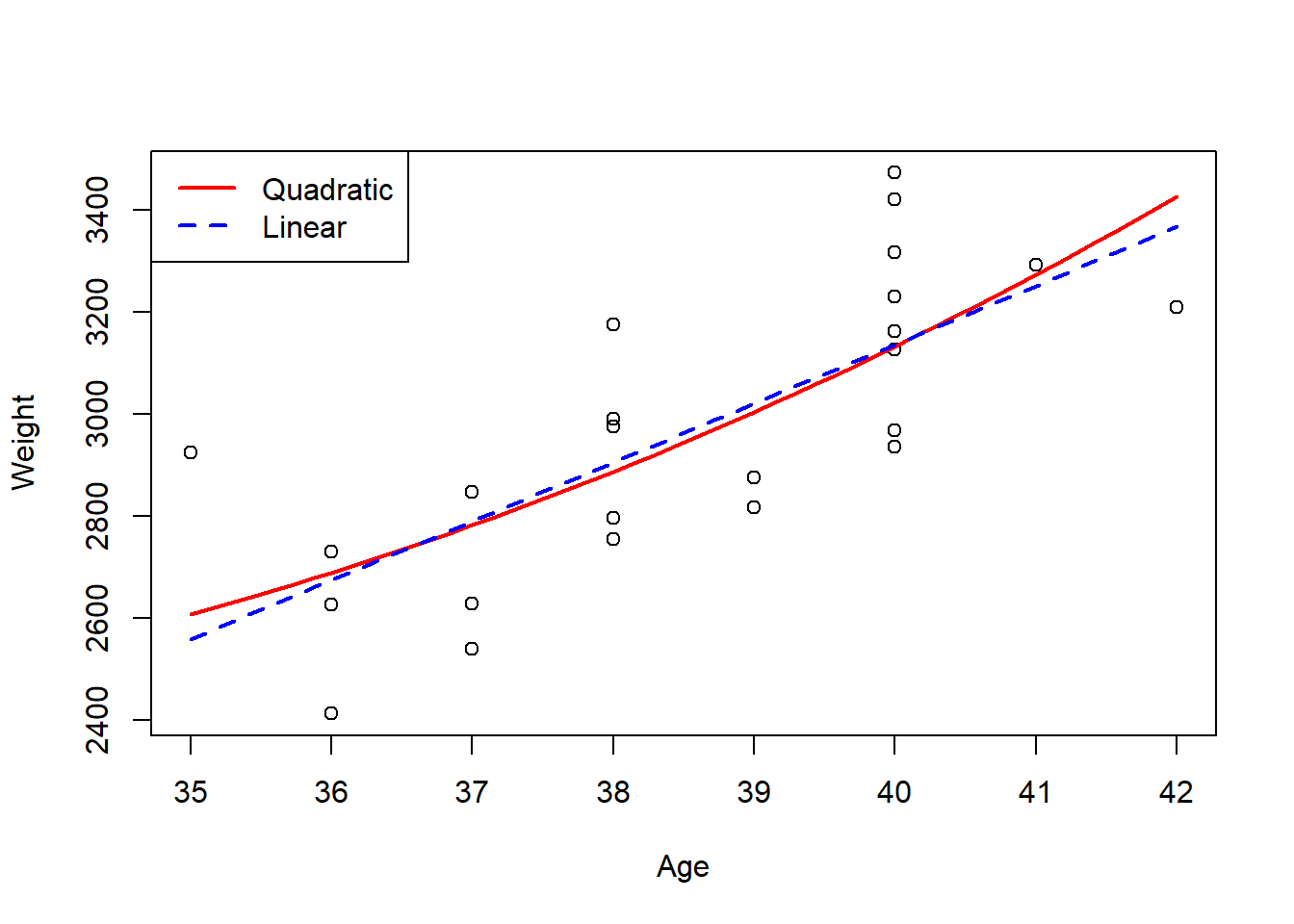

## 3315.961 3337.539 3359.360 3381.424 3403.733 3426.284To demonstrate the fit of this model visually, create a plot depicting the relationship between the two variables being explored, with the predicted values added to the plot. If the model fits the data well, the predicted values line should match the trend of the data well.

For comparative purposes, a line is added for the simple linear regression for this data to demonstrate the difference in fit of the two models and how the quadratic model fits the data better.

#basic plot for relationship between variables

plot(Weight ~ Age, data = birth)

#add lines for each of the sets of predicted values

lines(birth_predict_quad2 ~ birth.new2$Age, col = "red", lty = 1, lwd = 2)

lines(predict(birth_simple_lm, newdata = birth.new2) ~ birth.new2$Age,

col = "blue", lty = 2, lwd = 2)

#add a legend for clarity

legend("topleft", c("Quadratic", "Linear"),

col = c("red", "blue"), lty = c(1,2), lwd = 2)

As with the other method for predictions, this method also works with generalised linear models fitted with the glm() function. To demonstrate this, the binomial logistic regression model fitted to the beetles dataset is used.

For example, predicting in the same way as before but for doses of 1.7 and 1.8, the following code would be used.

#create a new data frame to predict with doses of 1.7 and 1.8

beetles_newdata1 <- data.frame(dose = c(1.7, 1.8))

#predict function

predict(beetles_glm_props, newdata = beetles_newdata1)## 1 2

## -2.4579008 0.9691318However, these predicted values are not what is expected for the probability of death. This is due to the default type of the predict function being type = link which returns predicted values of \(\text{logit}(p(x))\). To return the predicted values of the probabilities instead, type = "response" needs to be added as an argument, as follows.

#predict the probabilities

beetles_predict_glm <- predict(beetles_glm_props, newdata = beetles_newdata1,

type = "response")

beetles_predict_glm## 1 2

## 0.07886269 0.72494641These predictions are much more what you would expect for probabilities, given that they are between 0 and 1. For more information on probability, see Module 5.

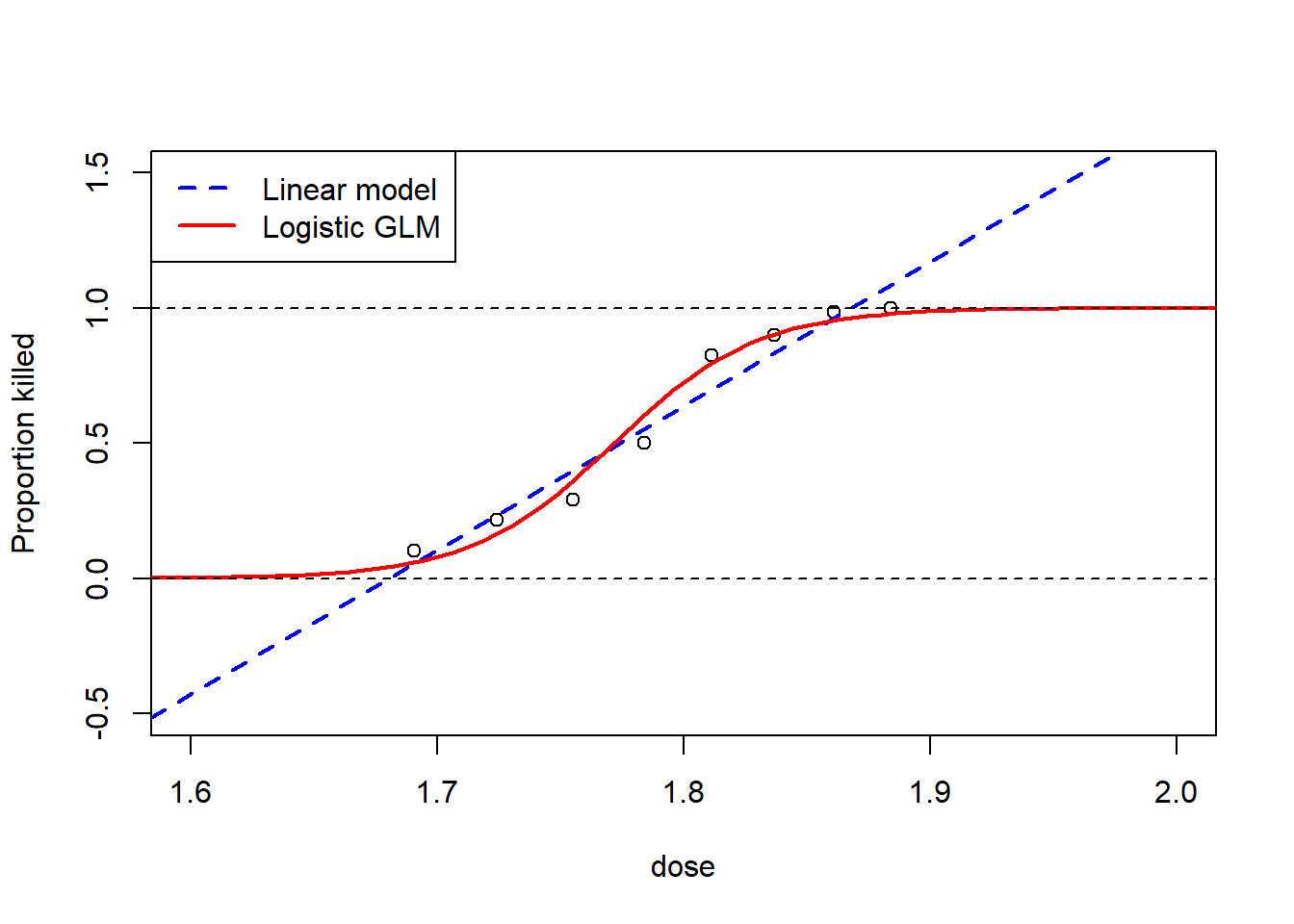

The fit of this GLM can be assessed in the same way as for the models fitted using the lm() function, through predicting values for a wider range of values and fitting the predicted values to a plot. To demonstrate the goodness-of-fit of the GLM and why it is important to fit a GLM over a simple linear regression model, a linear model is fitted with weights added for the number of beetles exposed, with the line of best fit of this linear regression model also added to the plot.

#fit a simple linear regression model for the beetles data

beetles_simple_lm <- lm(prop_killed ~ dose , data = beetles, weights = exposed)

#create a new dataset

beetles_newdata2 <- data.frame(dose = seq(min(beetles$dose) - .2,

max(beetles$dose) + .2,

length = 100))

#plot the relationship between the dosage and proportion of beetles killed

plot(prop_killed ~ dose, data = beetles, ylim = c(-0.5, 1.5), xlim = c(1.6, 2),

ylab = "Proportion killed", xlab = "dose")

#add a line of best fit for the simple linear regression model

abline(beetles_simple_lm, col = "blue", lwd = 2, lty = 2)

#add a line for the predicted values from the GLM

lines(beetles_newdata2$dose, predict(beetles_glm_props,

newdata = beetles_newdata2,

type = "response"), lwd = 2, col = "red")

abline(h = c(0,1), lty = 2)

legend("topleft", c("Linear model", "Logistic GLM"), lty = c(2,1), lwd = 2,

col = c("blue", "red"))

It is clear to see from this plot that the GLM fits much better than the linear model, given that for the linear model, at a dosage of 1.6, the proportion of beetles killed is actually negative, and that for a dosage of 1.9, the proportion of beetles killed is greater than 1, neither are logistically possible proportions. However, for the GLM, the proportion of beetles killed is always between the values of 0 and 1. For example, for the GLM, at a dosage of 1.6, instead of having a negative proportion, the proportion is just very close to 0 meaning that it is unlikely for any beetles to be killed, with the opposite occurring at a dosage of 1.9 where the proportion is very close to 1. Beyond the linear model not being realistically feasible for certain values of dosage, the line of best fit also does not fit the trend of the observed points as well as the GLM.

4.6 Model selection

4.6.1 Accuracy and precision

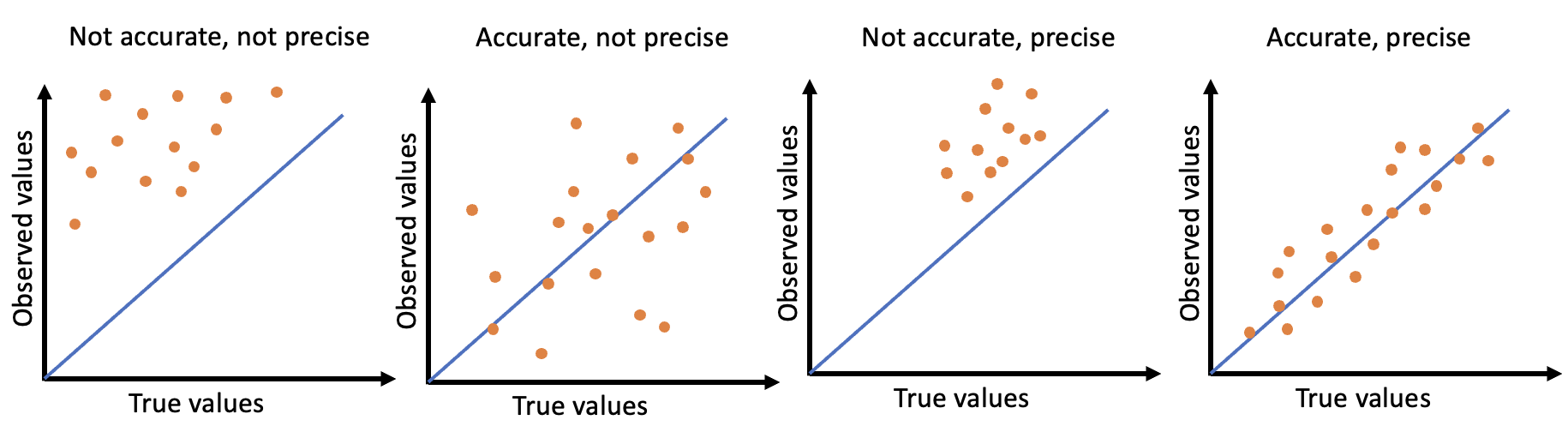

One of the main goals when modelling is to produce the best fitting models with the least amount of error. One type of error is observational error, made up of accuracy and precision, and can be used to measure results. Accuracy can be defined as the distance between the observed/estimated results and the true values, and precision can be defined as the spread of the observed/estimated results. In an ideal situation, you would want the observations to be close together and close to the true values.

A visual representation of accuracy and precision can be seen in the figure below, where the graphs depict the 4 different combinations of the observational error types.

(#fig:image accuracy and precision)Visual examples of accuracy and precision

There are multiple ways of testing the accuracy and precision of a model, for example the Akaike information criterion which assesses the goodness-of-fit of a model, which could be described as assessing the comparative accuracy when paired with at least one other model. This method and others are be discussed in this module.

4.6.2 Akaike information criterion

The Akaike information criterion (AIC) estimates the proportional quality of one model compared to another through assessing the quality predictions. It uses the bias-variance trade-off to identify which model is preferred for the given data, taking into consideration the accuracy of the model’s predictions via the log-likelihood and the complexity of the model via a penalty term for the number of parameters within the model. Formally, the AIC is given as \[\text{AIC}=-2\ell + 2p,\] where \(\ell\) is the log-likelihood of a model and \(p\) is the number of parameters in the model.

Given that the AIC statistic is essentially a measure of both bias and variance, when comparing two models fitted to the same data, the model with the smallest AIC value is the better fitting model for the data.

To find the AIC statistic in R for a given model, the value can found using the function AIC() with the desired model included as an argument, or if fitting a GLM, the function summary() provides the AIC statistic in the output.

(#fig:image AIC)Reading the AIC statistic from a GLM sumamry

To demonstrate the AIC() function, the AIC statistics can be found for both the linear and quadratic regression models for the birth weight data as follows.

## [1] 324.53## [1] 321.3909The AIC statistic for the more complex model is smaller, supporting previously made conclusions that the multiple regression model is a better fitting regression model for the birth weight data compared to the simple linear regression model.

4.6.3 Bayesian information criterion

The Bayesian information criterion (BIC), is an alternative information to the AIC,and also uses the bias-variance trade-off. Unlike the AIC however, it uses the number of observations in the dataset in the computation of the penalty term. As a result of this difference, the BIC has a larger penalty term than the AIC and penalises complex models more than the AIC. The formula for the BIC is given below.

\[\text{BIC}=-2\ell + p \times \log(n),\] where \(\ell\) is the log-likelihood of a model, \(p\) is the number of parameters in the model and \(n\) is the number of observations in the dataset.

To compute the BIC statistic in R for a given model, the BIC() function can be used in much the same way as for computing the AIC statistic. Demonstrated below with the birth weight data.

## [1] 328.0642## [1] 326.1031These results agree with previous conclusions, that the more complex model, the multuple regression model, fits the data best given that the BIC value for this model is smaller.

Given the differences in the AIC and BIC, for a smaller dataset, the AIC might be more appropriate since it doesn’t penalise the more complex models as harshly. However, if the dataset is large, then the BIC may be more appropriate for preventing overfitting.

4.6.4 R-squared statistic

The \(R^2\) or R-squared statistic, also called the coefficient of determination, is a measure of goodness-of-fit of a given regression model through measuring the proportion of variance from the dependent variable that is explained by the independent variable(s).

There are two main components of the R-squared statistic, the sum of squares of the residuals and total sum of squares, both of which measure variation in the data, where squared values are used to account for fitted values being both above and below the true values.

To compute these measures of variation, let \(\boldsymbol{y}=y_1,...,y_n\) be a dataset with corresponding fitted values \(\boldsymbol{\hat{y}}=\hat{y}_1,...,\hat{y}_n,\). The residuals can be described as the estimates of unobservable error, or the difference between the observed and fitted values, then given as \(r_i= y_i - \hat{y}_i\).

The sum of squares of the residuals (the sum of the squared distance between the observed values and the fitted values) is computed through summing the squared values of the residuals as follows: \[RSS = \sum_{i=1}^n (y_i-\hat{y}_i)^2 = \sum_{i=1}^n r_i^2.\]

The total sum of squares (the sum of the squared distance between the observed values and the overall mean) follows the same structure as follows: \[TSS = \sum_{i=1}^n (y_i - \bar{y})^2,\] where \(\bar{y}= \sum_{i=1}^n y_i\) is the overall mean of the observed values.

The R-squared statistic is then computed using the following formula: \[ R^2 = 1-\frac{RSS}{TSS},\] and takes a value between \(0\) and \(1\).

Given that the better fitting a model is, the smaller the difference between the observed and fitted values is and hence the smaller the RSS value is, a better fitting model will have a larger R-squared value, with the perfectly fitting model having an RSS value of 0 and an R-squared value of 1. Therefore, when comparing the goodness-of-fit of two or more models, the model with the R-squared statistic value closest to 1 is the better fitting model for the given data.

The values for the R-squared statistics from models in R can be found directly through using the summary() function using the code summary()$r.squared.

For example, this function can be used with the linear and quadratic regression models for the welding data to find out which model fits the data better.

## [1] 0.9622726## [1] 0.9761461Whilst both of the R-squared statistics are high, the value for the quadratic model is slightly higher, supporting previous conclusions that the quadratic model fits the data better and hence the quadratic model should be used.

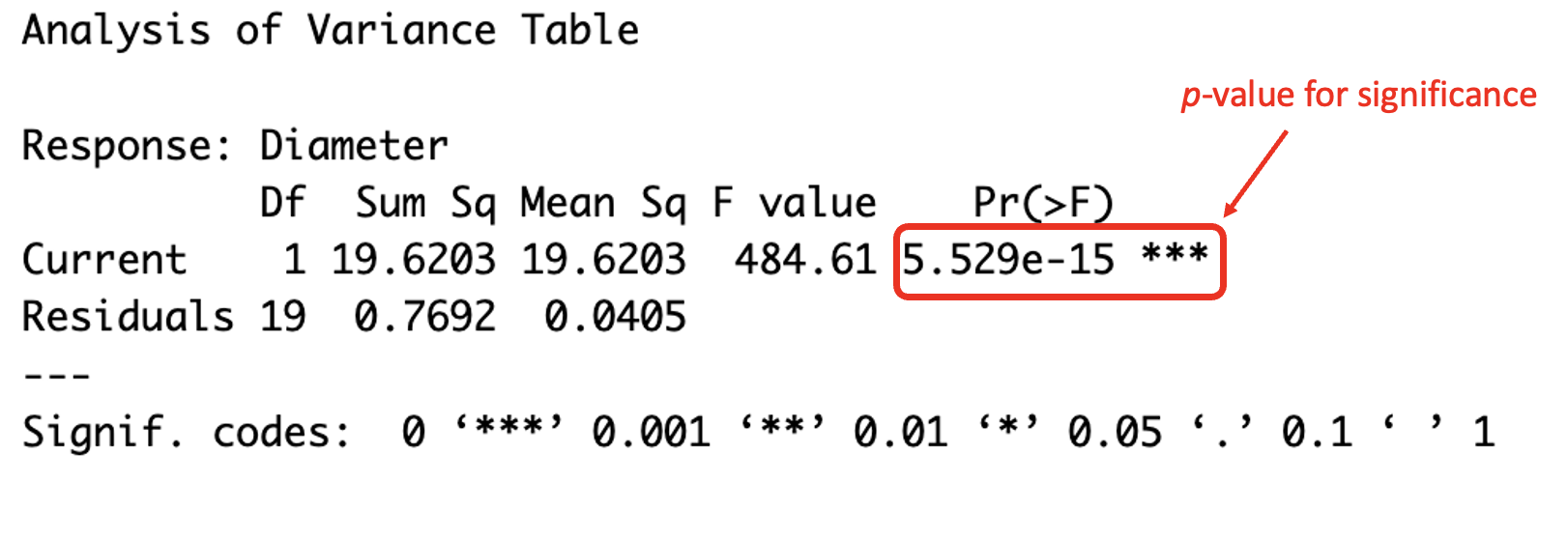

4.6.5 Analysis of variance

Analysis of variance (ANOVA) is a statistical test used to assess the relationship between the dependent variable and one or more independent variables.

To produce an ANOVA table, the function anova() can be used in R. If only one model is given as the argument, it will indicate as to whether the terms in the given model are significant. This is demonstrated below with the simple linear model for the welding data.

## Analysis of Variance Table

##

## Response: Diameter

## Df Sum Sq Mean Sq F value Pr(>F)

## Current 1 19.6203 19.6203 484.61 5.529e-15 ***

## Residuals 19 0.7692 0.0405

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(#fig:image ANOVA 1)Understanding the ANOVA table

It can be seen from the results that the p-value is very small, and much smaller than the standard 5% significance level indicating that current does have an impact on diameter, and therefore the term for current should remain in the model.

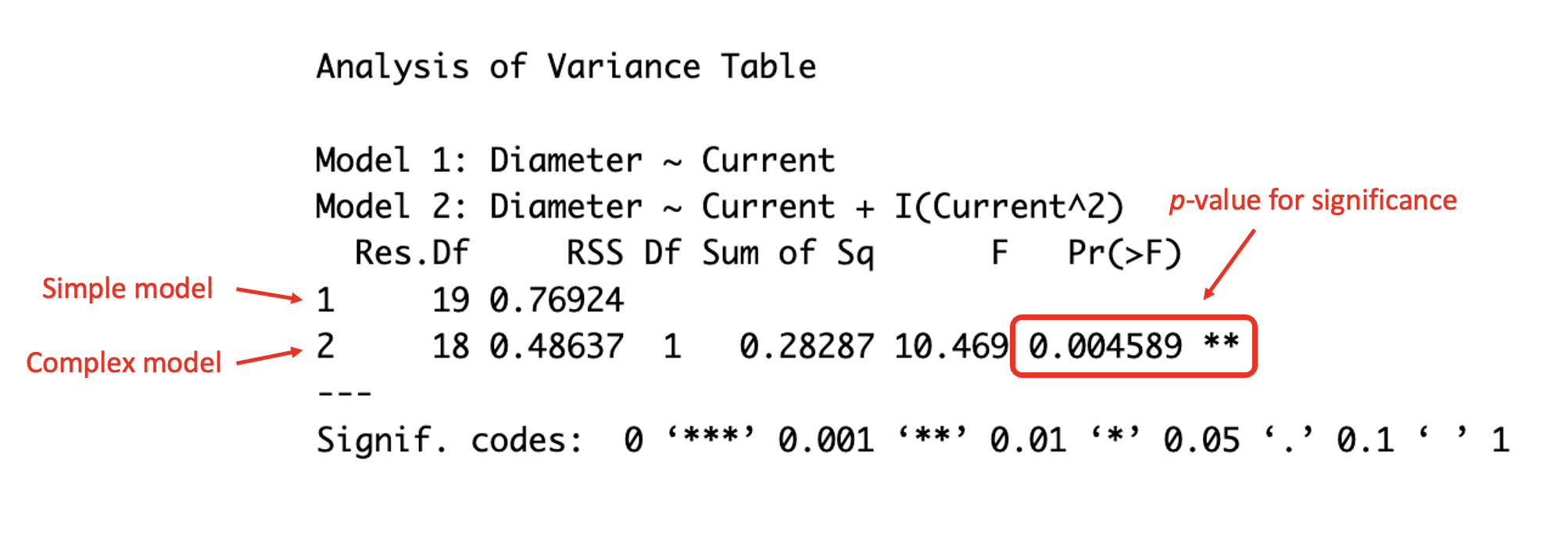

However, you can also test which model fits the data best by including multiple models as arguments. This is demonstrated in the R code below, with a comparison between the simple linear model and quadratic model for the welding dataset.

#produce an ANOVA table for the simple and quadratic models for the welding data

anova(weld_simple_lm,weld_quad_lm)## Analysis of Variance Table

##

## Model 1: Diameter ~ Current

## Model 2: Diameter ~ Current + I(Current^2)

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 19 0.76924

## 2 18 0.48637 1 0.28287 10.469 0.004589 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(#fig:image ANOVA 2)Understanding the ANOVA table

The p-value given in the ANOVA table is smaller than \(0.05\), which is the typical significance value chosen, indicating that there is evidence that the addition of the quadratic term is significant. Therefore, there is evidence that the quadratic model better explains the relationship between the diameter and current comparatively to the simple model.

4.6.6 Likelihood ratio testing

Likelihood ratio testing can be used to compare the fit of two models when the models are nested. In other words, if one model is a special case of the other where at least one parameter is removed from the model. The likelihood ratio test (LRT) helps to decide whether to reject the null hypothesis or not where the null hypothesis assumes that the nested model is at least as good as the more complex model.

The likelihood ratio test (LRT) statistic can be computed using the following formula \[ \lambda = - 2 \times (\ell(\text{model 1}) - \ell(\text{model 2})),\] where model 1 is nested in model 2 and \(\hat{\ell}()\) is the log-likelihood for the model given in the brackets.

To perform the likelihood ratio test, a constant, \(c\), is chosen to determine the significance level of the test. If the corresponding p-value to \(\lambda\) is less than \(c\), then there is evidence to reject the null hypothesis and the complex model is preferred, otherwise, if the p-value is greater than or equal to \(c\), there is evidence to not reject the null hypothesis and the nested model is preferred.

The LRT can be conducted manually, through first computing the value of \(\lambda\) through using the function logLik() in the equation with an argument for the chosen model to compute the log-likelihood of the chosen model.

#compute lambda

llmod1 <- logLik(birth_simple_lm)

llmod2 <- logLik(birth_multi_lm1)

lambda <- -2*(llmod1 - llmod2)The corresponding p-value can then be computed through using the function pchisq() which computes the chi-squared distribution function for the arguments included. In this case, to compute the p-value, input the value of \(\lambda\), the degrees of freedom (the difference between the degrees of freedom for the nested and complex models, given by the logLik() function) and set lower.tail = FALSE as arguments.

## [1] 0.02339251Given that the resulting p-value is less than \(0.05\), the most common significance level, there is evidence that the more complex model is preferred and that the null hypothesis should be rejected.

Alternatively, the function lrtest() within the package lmtest can be used to perform a likelihood ratio test. An example of this with the birth dataset is as follows.

#perform a likelihood ratio test on the simple and multiple regression models

lrtest(birth_simple_lm, birth_multi_lm1)## Likelihood ratio test

##

## Model 1: Weight ~ Age

## Model 2: Weight ~ Sex + Age

## #Df LogLik Df Chisq Pr(>Chisq)

## 1 3 -159.26

## 2 4 -156.69 1 5.1391 0.02339 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1It can be seen that the results from the function lrtest() are the same as computing the LRT manually, with the p-values being the same. Once again, there is therefore evidence that the null hypothesis should be rejected and that the more complex, multiple regression model is preferred, supporting the evidence of the AIC results.

The final most common way that a LRT can be done in R is through using the anova() function again, but this time specifying test = "LRT" as an additional argument.

## Analysis of Variance Table

##

## Model 1: Weight ~ Age

## Model 2: Weight ~ Sex + Age

## Res.Df RSS Df Sum of Sq Pr(>Chi)

## 1 22 816074

## 2 21 658771 1 157304 0.02514 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This method is conducted slightly differently, hence the slight variation in the p-value, however, the test is also valid and also indicates that the more complex model is preferred.

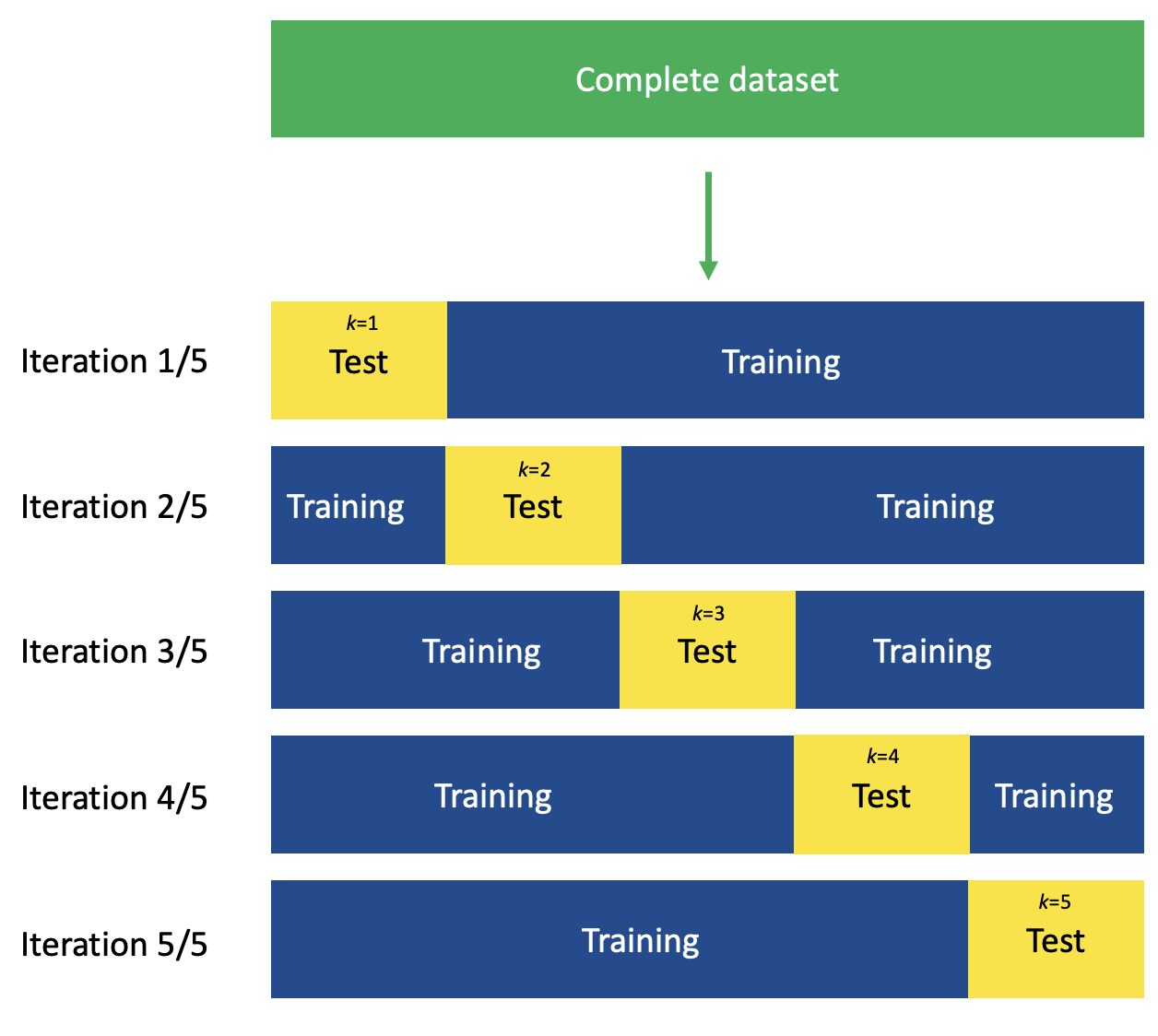

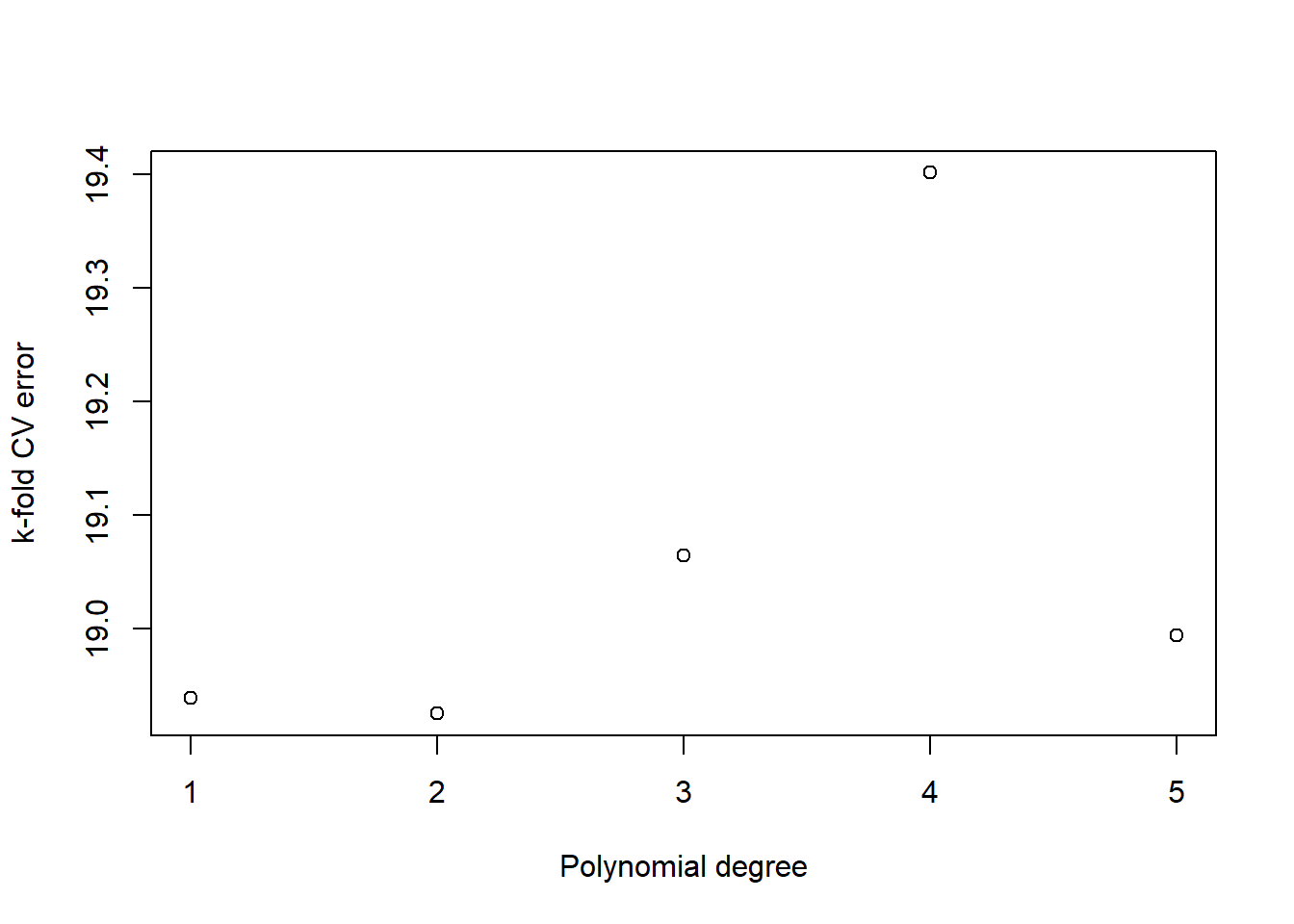

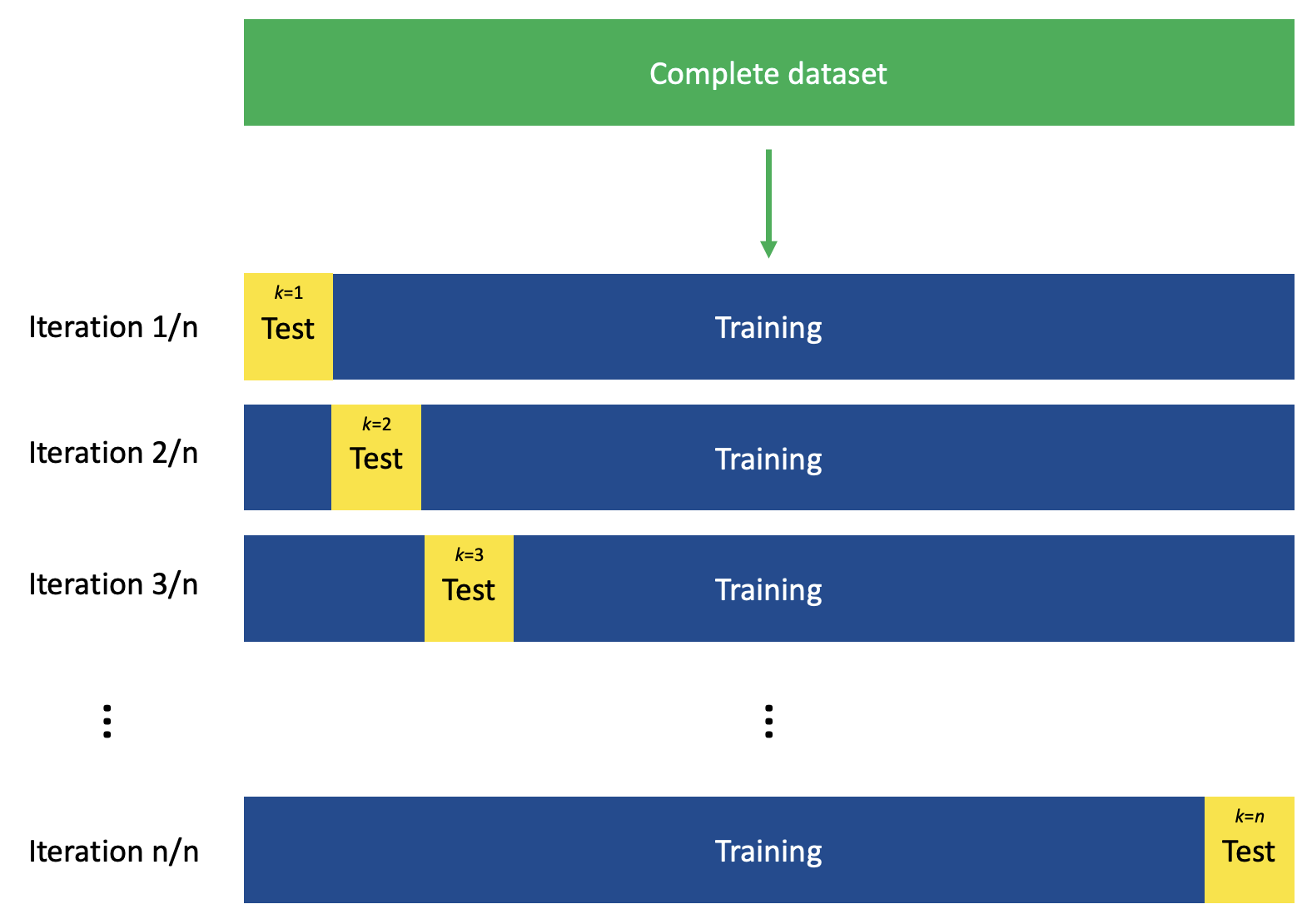

4.7 Stepwise regression

It is always important to find out which model fits the data best. When the data only has one or two covariates, using the methods discussed so far this module, it can be a simple process of fitting each of the models and using the evaluation methods to select the best fitting model. However, once the data has more than a couple covariates available, this process becomes more lengthy. This is when stepwise regression comes in useful, although it does not guarantee to select the best model.

This regression is a step-by-step iterative regression that looks at how the fit of a model changes when a variable is added or removed (depending on which direction you go in), testing the significance of the variable, in an automated process to select the best model with the data available. There are three approaches that can be taken with stepwise regression, the first being the way that people typically take manually and that is forward stepwise regression. For the forward approach, the process starts with the intercept-only model, adding one term at a time, testing its significance and keeping that term in the model if it is significant. The second approach is backward stepwise regression. For the backward approach, the same idea is used but the process starts with the saturated (full) model, removing terms one at a time and testing whether that term was significant through its impact on the model and the model’s fit. Finally is the bidirectional, or both-ways stepwise regression, which is a combination of both forward and backward regression to test which terms should be included or excluded. This is done by starting with the intercept-only model and adding sequentially adding terms that are deemed statistically significant, and after each new term is added, any terms which are no longer statistically significant are removed.

There are multiple ways of conducting stepwise regression in R, however, the most common approach is to use either the function stepAIC() from the MASS package or the function step() from the stats package, both functions used in the same way (although step() is a simplified version of stepAIC()). These methods of stepwise regression use the AIC by default to choose the best fitting model, with a model (either a lm() or glm() object) inputted as the object argument and the direction used chosen by adding the argument direction = and inputting one of "both", "backward" or "forward" as the direction of choice.

To fit the full or saturated model in R, instead of needing to type out each of the terms manually, after the tilde (~), you can put a full stop (.) in place of the terms which will add main effects for each covariate available in the data.

The mtcars dataset from the datasets package used introduced in Module 2 will be used to demonstrate the different approaches to stepwise regression given that there are many covariates available in the data. To find out more about the dataset itself, search for the mtcars help file with ?mtcars.

#'mtcars' is a data set available in the 'datasets' package with data on

#11 different aspects of auto mobiles for 32 auto mobiles from the 1974 Motor

#Trend US magazine

library(datasets)

#information on the dataset in the 'Help' pane

?mtcars

#load data and assign to 'cars_data'

cars_data <- mtcars4.7.1 Forward stepwise regression

To perform forward stepwise regression, you need to start from the intercept-only model as terms are added sequentially. Therefore, the first step is to fit the intercept-only model to the data.

##

## Call:

## lm(formula = mpg ~ 1, data = cars_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -9.6906 -4.6656 -0.8906 2.7094 13.8094

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 20.091 1.065 18.86 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6.027 on 31 degrees of freedomThen, to use the stepAIC() function, the direction argument needs to be specified as direction = "forward" and the range of models (lower and upper) to be assessed specified in the scope argument. If no scope argument is specified, the initial model is used as upper model, so to explore more than just the intercept-only model with forward stepwise regression, the initial model should be included as the lower scope and the saturated model as the upper scope. Therefore, the saturated model should also be fitted prior to performing the stepwise regression

##

## Call:

## lm(formula = mpg ~ ., data = cars_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.4506 -1.6044 -0.1196 1.2193 4.6271

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 12.30337 18.71788 0.657 0.5181

## cyl -0.11144 1.04502 -0.107 0.9161

## disp 0.01334 0.01786 0.747 0.4635

## hp -0.02148 0.02177 -0.987 0.3350

## drat 0.78711 1.63537 0.481 0.6353

## wt -3.71530 1.89441 -1.961 0.0633 .

## qsec 0.82104 0.73084 1.123 0.2739

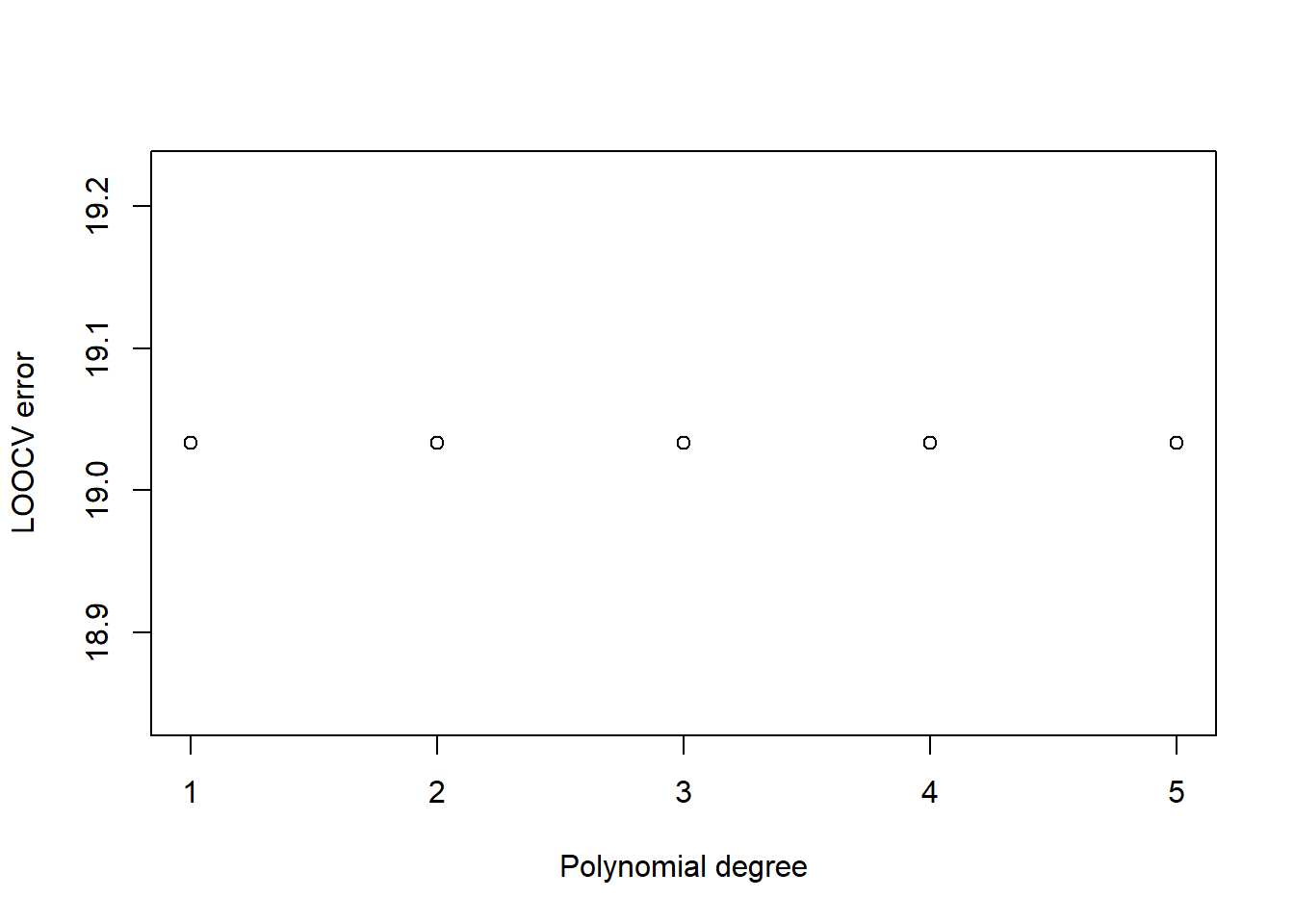

## vs 0.31776 2.10451 0.151 0.8814